- Published on

Mapping Worlds into Graphs with Qdrant, Neo4j, RF-DETR, BLIP-2 and Kung Fu

- Authors

- Name

- Athos Georgiou

In my previous adventures, I've explored ways to make retrieval systems more context-aware, first by combining vector search with knowledge graphs and then by building a dynamic ontology for NLP. But all this time, I've been working with static text data. What if I could extend this approach to generate datasets from video, which I can then use to build knowledge graphs?

The Guardian Awakens

While trying to understand more about how graph-based retrieval systems work, I kept bumping into one fundamental question: where does this data come from in the first place? It doesn't magically show up as ready-to-ingest text, right?

Text extraction from documents and web pages is relatively straightforward, but what about the vast amount of information locked in visual media? Security footage, drone surveys, traffic monitoring, retail analytics—these represent enormous untapped potential for knowledge graphs, but they require solving two key challenges:

- Object Detection & Tracking - Identifying entities and following them across time

- Entity Disambiguation - Understanding when the same object reappears and resolving relationships between entities

This is where I realized there was an opportunity to complete the loop. If we could reliably extract structured data from visual input, we could feed that directly into a graph, creating a comprehensive pipeline from raw video all the way to context-aware retrieval.

So, I did what I do best: sacrifice time watching 'Friends' with my wife to instead search for solutions to problems that don't exist... yet. Or, do they? They do now. So, I'm not sure who's worse off. Right? Right???

Honestly, all joking aside, we've been riding the LLM hype train for a while now. It's time to start getting serious. If we are to scale, LLMs are not the answer for everything. Encoder-decoder models are. Why? Because they are fast, efficient, and incredibly specialized.

The Power of Encoder-Decoder Models

After researching various object detection and image description models, I decided to experiment with the following:

- RF-DETR (Roboflow Detection Transformer) - A state-of-the-art real-time object detection model that outperforms existing models on real-world datasets

- BLIP-2 (Bootstrapping Language-Image Pre-training) - A vision-language model capable of generating detailed descriptions of visual content

You can get them both on huggingface:

To set the record straight, I'm aware that BLIP-2 is not just an encoder model. It consists of 3 models: a CLIP-like image encoder, a Querying Transformer (Q-Former), and a large language model. It is quite unique in what it does:

- The weights of the image encoder and large language model are initialized from pre-trained checkpoints and kept frozen while training the Querying Transformer. The BERT-like Transformer encoder maps a set of "query tokens" to query embeddings, which bridge the gap between the embedding space of the image encoder and the large language model.

Let's dive into how this approach can be used to extract structured data from video.

Annotation

The annotation pipeline consists of several key components working together:

1. Object Detection with RF-DETR

RF-DETR is a cutting-edge object detection model that uses a transformer-based architecture. What makes it special is its ability to accurately identify objects even in challenging real-world conditions. The model takes individual video frames as input and outputs bounding boxes and class labels for detected objects.

from rf_detr_runner import RFDETRRunner

# Initialize the model

rf_detr = RFDETRRunner(

detection_threshold=0.5,

model_type="rf_detr",

device="cuda" if torch.cuda.is_available() else "cpu"

)

# Process a frame

detections = rf_detr.process_frame(frame)

The output provides us with the locations and basic classifications of objects in the frame, but it doesn't give us rich semantic descriptions or understand the relationships between these entities.

2. Entity Description with BLIP-2

This is where BLIP-2 comes in. For each detected object, we crop the corresponding region from the frame and pass it to BLIP-2, which generates a natural language description of the entity.

from blip2_describer import BLIP2Describer

# Initialize BLIP-2

blip2 = BLIP2Describer(device="cuda" if torch.cuda.is_available() else "cpu")

# Generate descriptions for each detected object

descriptions = {}

for detection_id, detection in detections.items():

x1, y1, x2, y2 = detection["bbox"]

object_region = frame[y1:y2, x1:x2]

prompt = f"Describe this {detection['class']}: "

descriptions[detection_id] = blip2.generate_description(object_region, prompt)

Instead of just knowing that "person_1" exists at coordinates (x, y), we now have rich descriptions like "A woman in a red coat walking her dog on a leash." This semantic richness is crucial for building meaningful knowledge graphs.

3. Entity Tracking and Relationship Inference

The most powerful component of the system is the VideoEntityTracker, which maintains persistent identities for objects across frames and infers relationships between them based on their spatial arrangements.

from video_entity_tracker import VideoEntityTracker

# Initialize tracker

tracker = VideoEntityTracker(iou_threshold=0.5, environment="street_scene")

# Process frames sequentially

for frame_id, frame in enumerate(video):

detections = rf_detr.process_frame(frame)

descriptions = blip2.describe_detections(frame, detections)

# Track entities and generate relationships

frame_data = tracker.process_frame(frame_id, detections, descriptions)

# frame_data contains entities, relationships, and deltas (changes since last frame)

The tracker handles several critical tasks:

- Identity Persistence: Using IoU (Intersection over Union) matching to determine when a detection in the current frame corresponds to an entity from previous frames

- Delta Generation: Tracking which entities are new, updated, or removed between frames

- Relationship Inference: Automatically deriving spatial relationships like "next_to," "overlaps," "contains," etc.

4. Structured Dataset Generation

The final output is a JSON dataset that captures the entire semantic content of the video, organized frame by frame:

[

{

"frame_id": 1,

"timestamp": "2025-04-15T09:00:00Z",

"environment": "street_scene",

"entities": [

{

"entity_id": "person_0",

"type": "person",

"bbox": [100, 200, 300, 400],

"confidence": 0.97,

"description": "Woman in a red coat walking a dog on a leash"

},

{

"entity_id": "car_2",

"type": "car",

"bbox": [500, 200, 800, 400],

"confidence": 0.89,

"description": "Blue sedan parked by the curb"

}

],

"relationships": [

{

"subject": "person_0",

"predicate": "next_to",

"object": "car_2"

}

],

"delta": {

"new_entities": ["person_0", "car_2"],

"updated_entities": [],

"removed_entities": []

}

}

// Additional frames...

]

This structured representation can now be used as input for our knowledge graph system.

From Video to Graph: The Integration

Now comes the exciting part: integrating this video annotation system with our existing GraphRAG pipeline. The structured JSON dataset provides all the components we need to build a rich knowledge graph:

Entities become nodes in our Neo4j database, with properties including:

- Type classification (person, car, animal, etc.)

- Visual attributes from BLIP-2 descriptions

- Temporal existence (when they appear and disappear)

- Confidence scores

Relationships become edges with properties like:

- Relationship type (next_to, contains, approaches)

- Temporal validity (when the relationship exists)

- Confidence scores

Frame metadata becomes context that helps us understand the setting and environment

Here's a simplified example of how we can transform the video dataset into a Neo4j graph:

from neo4j import GraphDatabase

class VideoGraphBuilder:

def __init__(self, uri, user, password):

self.driver = GraphDatabase.driver(uri, auth=(user, password))

def ingest_video_dataset(self, dataset_path):

with open(dataset_path, 'r') as f:

dataset = json.load(f)

with self.driver.session() as session:

# Create a Video node

session.run("""

CREATE (v:Video {id: $video_id, environment: $environment})

""", video_id="video_1", environment=dataset[0]["environment"])

# Process frames

for frame_data in dataset:

# Create Frame node

session.run("""

MATCH (v:Video {id: $video_id})

CREATE (f:Frame {id: $frame_id, timestamp: $timestamp})

CREATE (v)-[:CONTAINS_FRAME]->(f)

""", video_id="video_1", frame_id=frame_data["frame_id"],

timestamp=frame_data["timestamp"])

# Create entities

for entity in frame_data["entities"]:

session.run("""

MERGE (e:Entity {id: $entity_id})

ON CREATE SET e.type = $type,

e.first_seen = $frame_id

ON MATCH SET e.last_seen = $frame_id

WITH e

MATCH (f:Frame {id: $frame_id})

CREATE (f)-[:CONTAINS_ENTITY {

bbox: $bbox,

confidence: $confidence,

description: $description

}]->(e)

""", entity_id=entity["entity_id"], type=entity["type"],

frame_id=frame_data["frame_id"], bbox=entity["bbox"],

confidence=entity["confidence"], description=entity["description"])

# Create relationships

for rel in frame_data["relationships"]:

session.run("""

MATCH (s:Entity {id: $subject})

MATCH (o:Entity {id: $object})

MERGE (s)-[r:`FRAME_` + $frame_id + '_' + $predicate]->(o)

SET r.frame_id = $frame_id,

r.predicate = $predicate

""", subject=rel["subject"], object=rel["object"],

frame_id=frame_data["frame_id"], predicate=rel["predicate"])

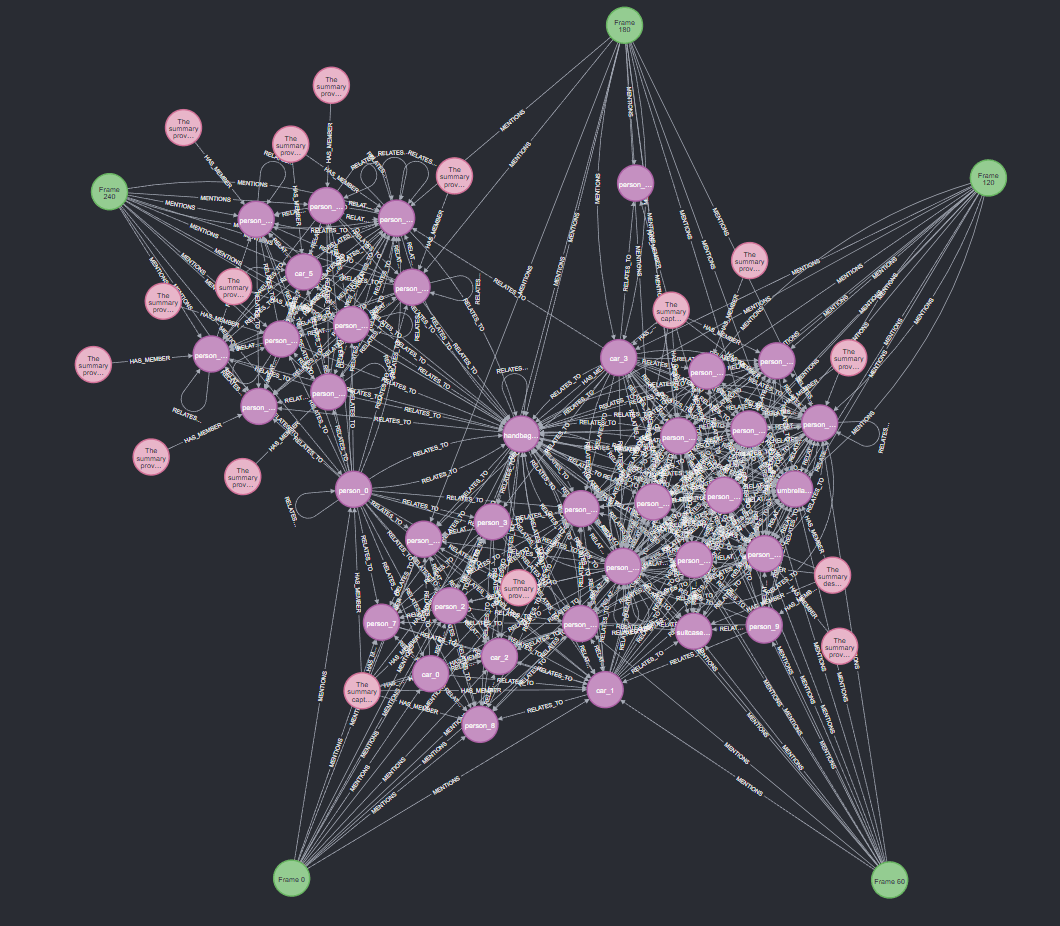

Five Frames of New York City

Here's what the Neo4j graph looks like for 1 FPS and 5 seconds of video from a typical day in New York City. Just as you'd expect:

We can now run queries like:

- "Find all instances where a person approached a vehicle"

- "Show me all objects that appeared and disappeared together"

- "Identify entities that maintained a 'next_to' relationship for more than 5 seconds"

More importantly, we could seamlessly integrate this with an existing GraphRAG pipeline, enabling queries like:

// Find mentions of red cars in documents that relate to streets similar to those

// where our video detected multiple pedestrians

MATCH (d:Document)-[:CONTAINS]->(c:Chunk)

WHERE c.text CONTAINS "red car"

WITH d

MATCH (v:Video {environment: "street_scene"})

MATCH (v)-[:CONTAINS_FRAME]->(f:Frame)

MATCH (f)-[:CONTAINS_ENTITY]->(e:Entity {type: "person"})

WITH f, COUNT(e) as person_count

WHERE person_count > 3

RETURN d.title, f.id

ORDER BY person_count DESC

LIMIT 5

This query combines information from both our document corpus and our video knowledge graph, enabling multimodal retrieval.

Performance and Optimizations

While the system works well, there were several performance challenges to overcome:

1. Processing Speed

Running RF-DETR and BLIP-2 on every frame of high-resolution video is computationally expensive. To address this, I implemented several optimizations:

- Frame Sampling: Processing only 1-5 frames per second instead of every frame, unless you're GPU Rich

- Batch Processing: Running detection and description in batches to leverage GPU parallelism

- Progressive Resolution: Starting with lower-resolution analysis and only using full resolution when needed

2. Entity Tracking Accuracy

Maintaining consistent entity tracking across occlusions and viewpoint changes is challenging. Some strategies that helped:

- Appearance Modeling: Using BLIP-2 descriptions as a fallback when IoU matching fails

- Temporal Smoothing: Incorporating velocity and direction predictions

- Confidence Thresholds: Only claiming entities are the same when confidence exceeds a threshold

Why did I Do This?

Honestly, no idea. I just wanted to see what capabilities encoder-decoder models have. It's by no means production ready, but it's a fun proof of concept of how we can use encoder-decoder models to extract structured data from video and use it to build a knowledge graph.

Future Directions

This little app is just one of many possible exciting possibilities for GraphRAG systems, including multi-modal corpus integration that combines video with other data types, temporal reasoning capabilities for understanding sequences and changes over time, interactive visual querying interfaces that go beyond text-based searches, and potential real-time processing pipelines that continuously update knowledge graphs from streaming video sources.

Try It Yourself

The complete code for the video annotation system is available on GitHub, and my previous posts provide details on setting up the GraphRAG pipeline with Neo4j and Qdrant.

Installation

Clone the repository:

git clone https://github.com/athrael-soju/rf-detr-blip2-object-detection cd rf-detr-blip2-object-detectionInstall dependencies:

pip install -r requirements.txt # For BLIP-2 support, you'll need additional packages: pip install transformers torch Pillow

Usage

Run the main script with various options:

# Basic usage with default parameters

python main.py --input ./input/shinjuku.mp4 --output ./output/video.mp4

# Use BLIP-2 for AI-powered descriptions

python main.py --input ./input/tokyo_15min.mp4 --output ./output/annotated_tokyo.mp4 --blip2

# Generate dataset with entity tracking

python main.py --input ./input/street_scene.mp4 --output ./output/annotated.mp4 --generate-dataset --dataset-output ./output/street_data.json --environment street --blip2 --fps 5 --seconds 30

# Process NYC video at 1 FPS for 5 seconds

python main.py --input ./input/nyc.mp4 --output ./output/annotated.mp4 --generate-dataset --dataset-output ./output/nyc_data.json --environment street --blip2 --fps 1 --seconds 5

Key command-line arguments include:

--fps— Target FPS to process (default: original video FPS)--seconds— How many seconds of video to process (default: all)--blip2— Use BLIP-2 to generate descriptions for detected entities--generate-dataset— Generate a structured dataset with entity tracking--environment— Environment label for the dataset (default: "unknown")--iou-threshold— IoU threshold for entity tracking between frames (default: 0.5)

For more details and additional options, check out the GitHub repository.

Console Output

When running the pipeline, you'll see detailed logging information that helps you monitor progress and performance. Here's an example of what the console output looks like when processing a video with these settings: main.py --input ./input/nyc.mp4 --output ./output/annotated.mp4 --generate-dataset --dataset-output ./output/nyc_data.json --environment street --blip2 --fps 1 --seconds 5

2025-04-30 14:34:54,432 - root - INFO - CUDA is available. Using GPU.

2025-04-30 14:34:54,433 - root - INFO - Starting video processing with the following settings:

2025-04-30 14:34:54,433 - root - INFO - Input: ./input/nyc.mp4

2025-04-30 14:34:54,434 - root - INFO - Output: ./output/annotated.mp4

2025-04-30 14:34:54,434 - root - INFO - Device: cuda

2025-04-30 14:34:54,434 - root - INFO - Detection Threshold: 0.5

2025-04-30 14:34:54,434 - root - INFO - Target FPS: 1.0

2025-04-30 14:34:54,434 - root - INFO - Processing Duration: 5.0s

2025-04-30 14:34:54,434 - root - INFO - Start Time: 0s

2025-04-30 14:34:54,434 - root - INFO - Using BLIP-2 for entity description

2025-04-30 14:34:54,434 - root - INFO - BLIP-2 Prompt: 'Describe this object:'

2025-04-30 14:34:54,434 - root - INFO - Generating dataset with environment: 'street'

2025-04-30 14:34:54,434 - root - INFO - Loading BLIP-2 processor...

2025-04-30 14:34:55,184 - root - INFO - BLIP-2 processor loaded successfully

2025-04-30 14:34:55,184 - root - INFO - Loading BLIP-2 model on cuda...

Loading checkpoint shards: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████| 2/2 [00:43<00:00, 21.72s/it]

2025-04-30 14:35:39,764 - root - INFO - BLIP-2 model loaded successfully

2025-04-30 14:35:39,777 - root - INFO - BLIP-2 model loaded successfully

2025-04-30 14:35:40,443 - root - INFO - Loaded video: ./input/nyc.mp4 (1080x1920, 60 FPS, 623 frames)

2025-04-30 14:35:40,444 - root - INFO - Processing every 60 frame(s) to achieve ~1.0 FPS

2025-04-30 14:35:40,446 - root - INFO - Processing 5.0 seconds from 0s (frames 0 to 300)

2025-04-30 14:35:49,396 - root - INFO - Frame 0: Detected 13 entities

2025-04-30 14:35:49,396 - root - INFO - Starting to process 13 entities with BLIP-2...

2025-04-30 14:35:49,396 - root - INFO - Processing entity 1/13...

2025-04-30 14:36:03,439 - root - INFO - Entity 1/13 described in 14.04s: a man with a beard and headphones walking down the street

2025-04-30 14:36:03,439 - root - INFO - Processing entity 2/13...

2025-04-30 14:36:05,533 - root - INFO - Entity 2/13 described in 2.09s: a bmw x5

2025-04-30 14:36:05,533 - root - INFO - Processing entity 3/13...

...

2025-04-30 14:39:14,988 - root - INFO - Video entity tracking data saved to ./output/street_data.json with 5 frames

2025-04-30 14:39:14,989 - root - INFO - Dataset saved to ./output/street_data.json

2025-04-30 14:39:14,989 - root - INFO - Processing complete. Output saved to ./output/annotated.mp4

2025-04-30 14:39:14,989 - root - INFO - Total processing time: 260.56s

2025-04-30 14:39:14,989 - root - INFO - Frames processed: 5

2025-04-30 14:39:14,989 - root - INFO - Total entities detected: 69

2025-04-30 14:39:14,989 - root - INFO - Average entities per frame: 13.80

2025-04-30 14:39:14,989 - root - INFO - Average time per frame: 52.11s

2025-04-30 14:39:14,989 - root - INFO - Time breakdown - Detection: 9.03s, Description: 204.29s, Annotation: 0.28s, Dataset Generation: 0.01s

The logs show the detection counts, entity tracking statistics, and relationship generation for each processed frame. You can see how the system dynamically tracks new, updated, and removed entities across frames. At the end, you get useful summary statistics about the processing job.

Annotated Video Generation and Dataset Visualization

Beyond the structured JSON data, the system also generates an annotated video output that visually represents all the detected entities and their relationships. This provides an intuitive way to validate the accuracy of the detection and tracking algorithms.

The project includes a dedicated visualization tool (visualize_dataset.py) that offers comprehensive analytics and insights into your dataset:

# Generate all visualizations for a dataset

python visualize_dataset.py --dataset ./output/street_data.json --output-dir ./output/visualizations --all

# Or generate specific visualizations

python visualize_dataset.py --dataset ./output/street_data.json --timeline --changes --statistics

These visualizations provide valuable insights such as:

- Entity Timeline: Shows when each entity appears and disappears across the video

- Entity Changes: Tracks how the scene evolves frame by frame

- Relationship Graphs: Network graphs showing spatial relationships between entities at specific frames

- Movement Animation: Animated visualization of entity trajectories over time

- Entity Statistics: Charts and statistics about entity types, lifespans, and relationship distributions

Here's a few examples of the visualizations:

- Entity Timeline

- Entity Changes

- General Statistics

These visualizations are just something I threw together to help me understand the data better. They are not part of the main pipeline and are not used in the knowledge graph, but they could provide some insights prior to ingesting the data into the knowledge graph.

What's Next?

The journey down the GraphRAG rabbit hole continues to reveal new depths. By introducing encoder-decoder models for object detection and description, we can create retrieval systems that understand not just the words we write, but the world we live in.

In a future post, I'll demonstrate how to completely replace BLIP-2 with specialized encoder-decoder models to create an even more efficient and accurate retrieval system.

Until next time, happy coding!