
Integrating multi-user Assistants using the latest OpenAI API

By Athos Georgiou


Welcome to the latest instalment of the series on building an AI chat assistant from scratch. In this article, I'll share my experience integrating multi-user Assistants with the OpenAI Assistants API. It's been a bit of a challenge, because I originally had something much simpler in mind, but now that it's done, I'm pretty happy with the result. This one's for the Scope Creep Gods!

In prior articles, I've covered the following topics:

- Part 1 - Integrating Markdown in Streaming Chat for AI Assistants

- Part 2 - Creating a Customized Input Component in a Streaming AI Chat Assistant Using Material-UI

- Part 3 - Integrating Next-Auth in a Streaming AI Chat Assistant Using Material-UI

Although these topics don't cover the entire project, they provide a good starting point for anyone interested in building their own AI chat assistant and serve as the foundation for the rest of the series.

If you'd prefer to skip ahead and grab the code yourself, you can find it on GitHub.

Overview

The OpenAI Assistants API lets you build AI assistants into your own applications. Each Assistant follows its own instructions and can use a model, tools, and uploaded files to answer user queries. The Assistants API currently supports three types of tools: Code Interpreter, Retrieval, and Function Calling.

Generally, integrating the Assistants API involves these steps:

- Define an Assistant within the API, customizing its instructions and selecting a model. Optionally, activate tools such as the Code Interpreter, Retrieval, and Function Calling.

- Initiate a Thread for each user conversation.

- Append Messages to the Thread in response to user inquiries.

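- Run the Assistant on the Thread to generate a response. Code Interpreter and Retrieval are invoked automatically when needed. With Function Calling, the Run pauses until your application submits the function outputs.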

- Retrieve the Assistant's response from the Thread.

- Repeat the last three steps (add a Message, run the Assistant, retrieve the response) as needed.
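A Run is asynchronous, so after creating one you have to poll it until it finishes before reading the reply from the Thread. The sketch below is a hypothetical helper, not part of Titanium. `retrieveRun` is injected, so you could pass something like `() => openai.beta.threads.runs.retrieve(threadId, runId)` from the v4 Node SDK. The one-second interval and 60-attempt limit are arbitrary assumptions.

```typescript
// Hypothetical helper (not part of Titanium): poll a Run until it stops.
interface RunLike {
  id: string;
  status: string; // e.g. 'queued', 'in_progress', 'completed'
}

// Statuses after which further polling is pointless. 'requires_action' means the
// app must submit function-call outputs before the Run can continue.
const STOP_STATUSES = new Set(['requires_action', 'cancelled', 'failed', 'completed', 'expired']);

const delay = (ms: number): Promise<void> =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

async function waitForRun(
  retrieveRun: () => Promise<RunLike>,
  { intervalMs = 1000, maxAttempts = 60 }: { intervalMs?: number; maxAttempts?: number } = {}
): Promise<RunLike> {
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    const run = await retrieveRun();
    if (STOP_STATUSES.has(run.status)) return run;
    await delay(intervalMs); // still queued, in progress or cancelling
  }
  throw new Error(`Run still pending after ${maxAttempts} polls`);
}
```

Once `waitForRun` resolves with `completed`, the newest Assistant message can be read from the Thread's message list. Any other stop status should be surfaced to the user.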

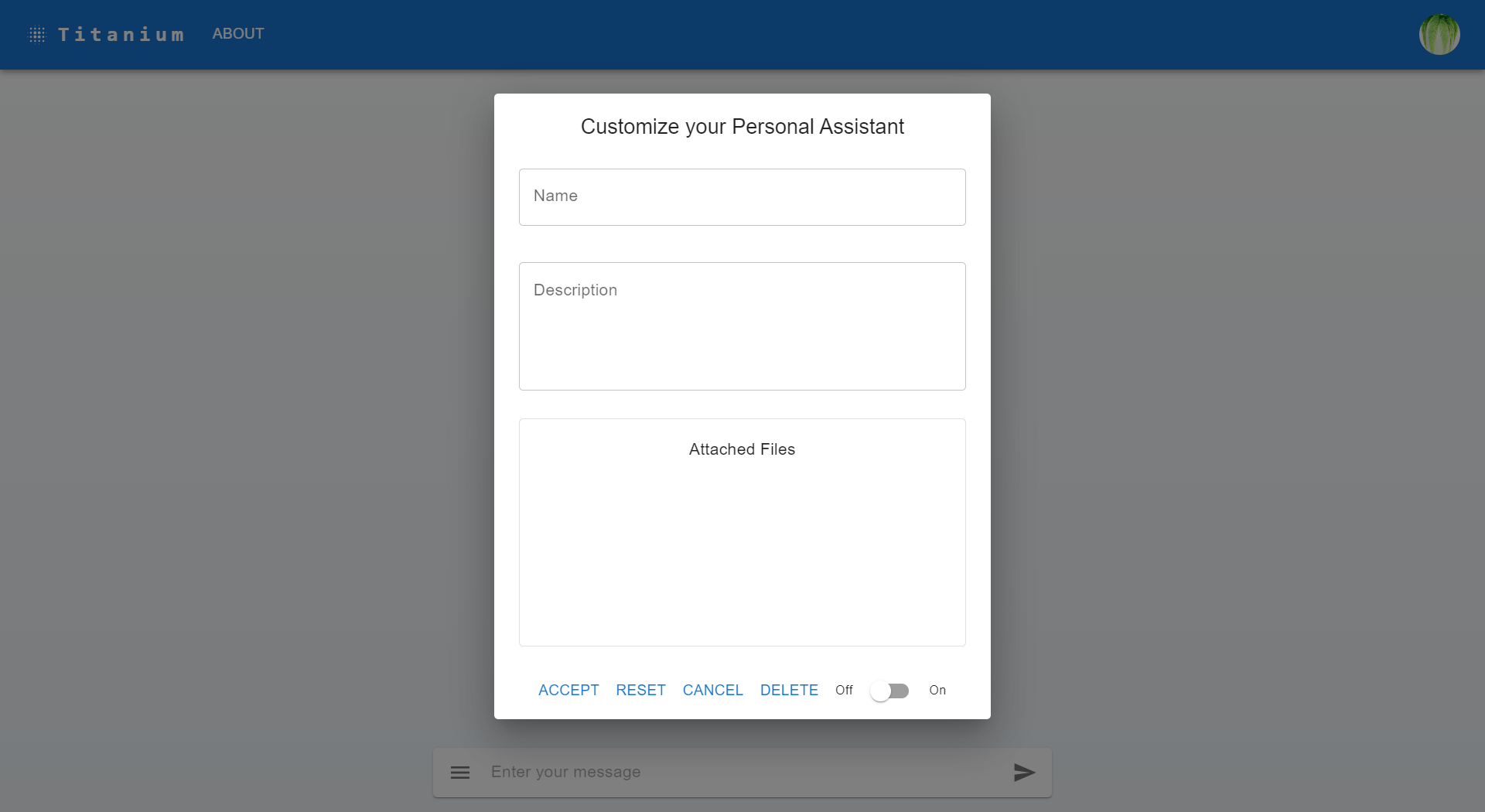

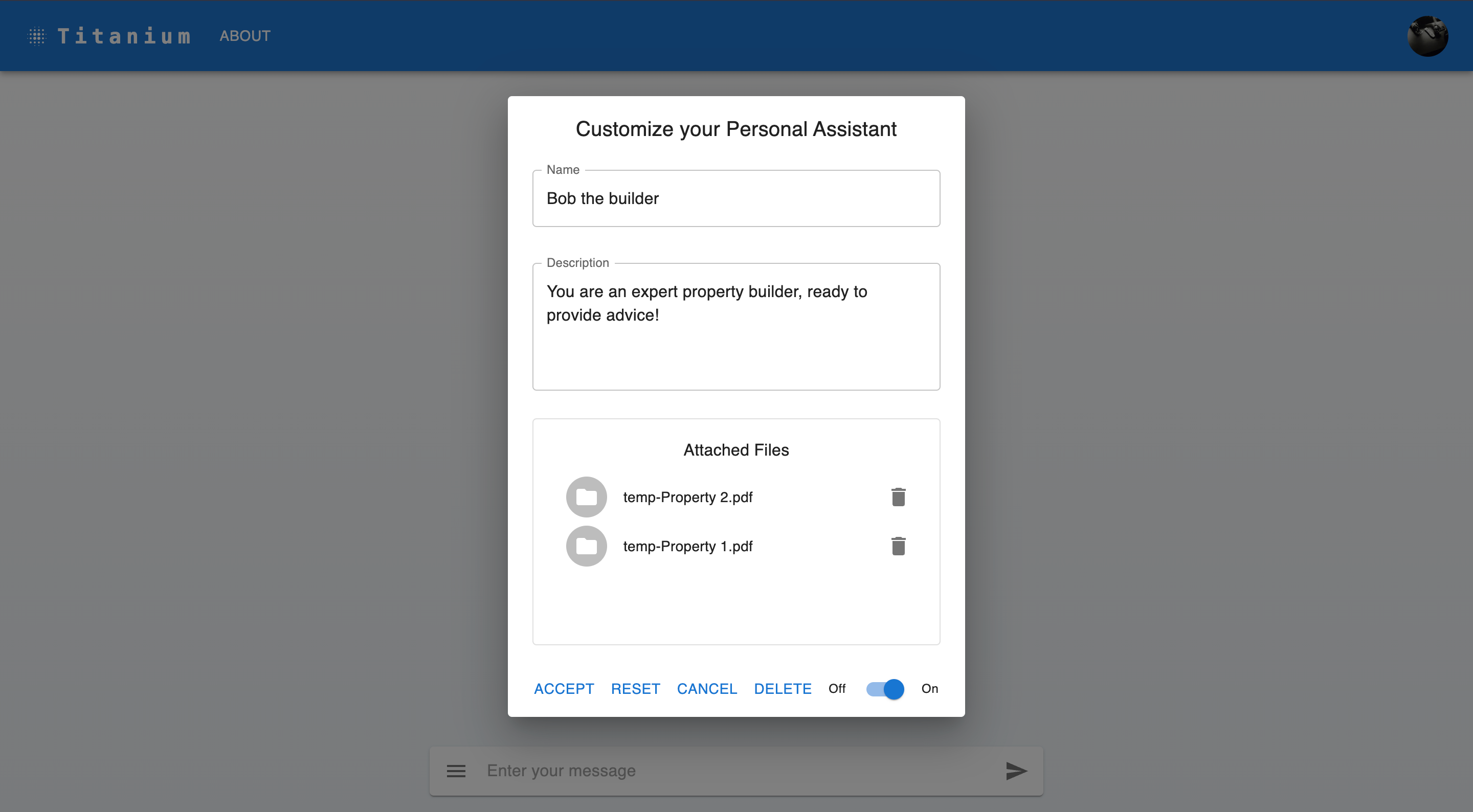

Our goal is to create a customized configuration component for the user's Assistant. This component lets the user configure the Assistant's name and description. When the Assistant is enabled, the user interacts with it through the chat input component, which also lets them upload files to the Assistant. When the Assistant is disabled, the chat input component dynamically switches back to the standard streaming chat experience. The user can also delete all Assistant-related data and files.
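The article doesn't show exactly where Titanium decides between the two chat modes. A minimal, hypothetical way to express the switch is a router that reads the enabled flag on every message, so toggling the Assistant takes effect on the next send. `createMessageRouter` and the two handlers are illustrative names, not Titanium APIs.

```typescript
// Hypothetical sketch: send each message to the Assistant or to the standard
// streaming chat, depending on the user's current toggle.
type MessageHandler = (message: string) => Promise<void>;

interface ChatRoutes {
  assistant: MessageHandler; // e.g. add a Message, run the Assistant, poll for the reply
  streaming: MessageHandler; // e.g. the existing streaming chat completion
}

function createMessageRouter(
  routes: ChatRoutes,
  isAssistantEnabled: () => boolean
): MessageHandler {
  // Read the flag on every call so a toggle applies to the very next message
  return (message) =>
    isAssistantEnabled() ? routes.assistant(message) : routes.streaming(message);
}
```

In a component, `isAssistantEnabled` could be a getter over the same state that the dialog's switch updates.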

Do keep in mind that the Assistants API is in beta and is subject to change. For more information, check out the OpenAI Assistants API documentation.

Prerequisites

Before we dive in, make sure you have the following:

- A basic understanding of React and Material-UI

- Node.js and npm installed in your environment.

- A React project set up, ideally using Next.js. Keep in mind that I'll be using Titanium, a template that already has a lot of the basic functionality for building an AI Assistant in place. You can use it as a starting point for your own project, or just follow along and copy/paste the code snippets you need.

Step 1: Enhancing the Authentication

Although auth was not part of the scope for this series, I decided to add it anyway, because it's a good practice to have authentication in place for any application that deals with user data. This step deals with extending and refactoring the existing authentication implementation to support the new functionality.

Extend the api/auth/[...nextauth]/route.ts auth route

The route is extended with a custom CredentialsProvider that lets users sign in as a guest. This is useful for testing, since you can try out the Assistant without signing in with a real GitHub or Google account. Each guest gets a randomly generated name and email address, and is saved to the MongoDB users collection if it doesn't already exist.

```typescript
import NextAuth, {
  Account,
  Profile,
  SessionStrategy,
  Session,
  User,
} from 'next-auth';
import type { NextAuthOptions } from 'next-auth';
import { MongoDBAdapter } from '@auth/mongodb-adapter';
import { JWT } from 'next-auth/jwt';
import { AdapterUser } from 'next-auth/adapters';
import GitHubProvider from 'next-auth/providers/github';
import GoogleProvider from 'next-auth/providers/google';
import CredentialsProvider from 'next-auth/providers/credentials';
import clientPromise from '../../../lib/client/mongodb';
import {
  uniqueNamesGenerator,
  Config,
  adjectives,
  colors,
  starWars,
} from 'unique-names-generator';
import { randomUUID } from 'crypto';
import Debug from 'debug';

const debug = Debug('nextjs:api:auth');

interface CustomUser extends User {
  provider?: string;
}

interface CustomSession extends Session {
  token_provider?: string;
}

// Builds a throwaway guest identity from an adjective, a color and a Star Wars name
const createAnonymousUser = (): User => {
  const customConfig: Config = {
    dictionaries: [adjectives, colors, starWars],
    separator: '-',
    length: 3,
    style: 'capital',
  };
  // Star Wars names contain spaces, so strip them from the handle
  const unique_handle: string = uniqueNamesGenerator(customConfig).replaceAll(' ', '');
  // Drop the leading adjective and use the rest as the display name
  const unique_realname: string = unique_handle.split('-').slice(1).join(' ');
  const unique_uuid: string = randomUUID();
  return {
    id: unique_uuid,
    name: unique_realname,
    email: `${unique_handle.toLowerCase()}@titanium-guest.com`,
    image: '',
  };
};

const providers = [
  GitHubProvider({
    clientId: process.env.GITHUB_ID as string,
    clientSecret: process.env.GITHUB_SECRET as string,
  }),
  GoogleProvider({
    clientId: process.env.GOOGLE_ID as string,
    clientSecret: process.env.GOOGLE_SECRET as string,
  }),
  CredentialsProvider({
    name: 'a Guest Account',
    credentials: {},
    async authorize(credentials, req) {
      const user = createAnonymousUser();
      // Get the MongoDB client and database
      const client = await clientPromise;
      const db = client.db();
      // Check if the user already exists
      const existingUser = await db
        .collection('users')
        .findOne({ email: user.email });
      if (!existingUser) {
        // Save the new guest so the Assistant routes can look it up by email
        await db.collection('users').insertOne(user);
      }
      return user;
    },
  }),
];

const options: NextAuthOptions = {
  providers,
  adapter: MongoDBAdapter(clientPromise),
  callbacks: {
    async jwt({
      token,
      account,
      profile,
    }: {
      token: JWT;
      account: Account | null;
      profile?: Profile;
    }): Promise<JWT> {
      // `account` is only populated on the initial sign-in
      if (account?.expires_at && account?.type === 'oauth') {
        token.access_token = account.access_token;
        token.expires_at = account.expires_at;
        token.refresh_token = account.refresh_token;
        token.refresh_token_expires_in = account.refresh_token_expires_in;
        // Record the actual OAuth provider ('github' or 'google')
        token.provider = account.provider;
      }
      // Guest (credentials) sign-ins fall back to the app name
      if (!token.provider) token.provider = 'Titanium';
      return token;
    },
    async session({
      session,
      token,
      user,
    }: {
      session: CustomSession;
      token: JWT;
      user: AdapterUser;
    }): Promise<Session> {
      // Expose the provider to the client
      if (token.provider) {
        session.token_provider = token.provider as string;
      }
      return session;
    },
  },
  events: {
    async signIn({
      user,
      account,
      profile,
    }: {
      user: CustomUser;
      account: Account | null;
      profile?: Profile;
    }): Promise<void> {
      debug(`signIn of ${user.name} from ${user?.provider ?? account?.provider}`);
    },
    async signOut({ session, token }: { session: Session; token: JWT }): Promise<void> {
      debug(`signOut of ${token.name} from ${token.provider}`);
    },
  },
  // The CredentialsProvider requires JWT sessions
  session: {
    strategy: 'jwt' as SessionStrategy,
  },
};

const handler = NextAuth(options);

export { handler as GET, handler as POST };
```
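The name and email derivation inside `createAnonymousUser` is easier to follow, and to test, as a pure function. The sketch below is a hypothetical refactor. `handle` stands in for the output of `uniqueNamesGenerator`, and the domain default copies the route's `titanium-guest.com`.

```typescript
// Hypothetical refactor of the guest-identity derivation used in createAnonymousUser.
interface GuestIdentity {
  name: string;
  email: string;
}

function deriveGuestIdentity(handle: string, domain = 'titanium-guest.com'): GuestIdentity {
  // Remove spaces (Star Wars names such as "Luke Skywalker" contain them)
  const compact = handle.replace(/ /g, '');
  // Drop the leading adjective; the remaining parts form the display name
  const name = compact.split('-').slice(1).join(' ');
  return { name, email: `${compact.toLowerCase()}@${domain}` };
}

// Example: deriveGuestIdentity('Brave-Red-Luke Skywalker')
// → { name: 'Red LukeSkywalker', email: 'brave-red-lukeskywalker@titanium-guest.com' }
```

The email is what the Assistant routes later use to find the user, so it is the field that has to stay stable for a given guest.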

Update the User model

The User model has been updated with additional fields: the IDs of the user's Assistant and Thread, and a flag indicating whether the Assistant is enabled. The API routes in Step 3 use these fields to find and update each user's Assistant.

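```typescript
interface IUser {
  email: string;
  name: string;
  description: string;
  // Set when the Assistant is first saved; reset to null when it is deleted
  assistantId?: string | null;
  threadId?: string | null;
  isAssistantEnabled: boolean;
}
```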
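Several routes below check `user.assistantId` and then cast `user.threadId as string`. A type guard can express "this user already has an Assistant and a Thread" once. The sketch is hypothetical. `UserRecord` mirrors the relevant `IUser` fields so the block is self-contained.

```typescript
// Hypothetical helper: narrow a user record to one that already has an
// Assistant and a Thread, so route handlers can avoid `as string` casts.
interface UserRecord {
  email: string;
  assistantId?: string | null;
  threadId?: string | null;
  isAssistantEnabled: boolean;
}

type UserWithAssistant = UserRecord & { assistantId: string; threadId: string };

function hasAssistant(user: UserRecord): user is UserWithAssistant {
  // Both IDs are written together by the update route and cleared together on delete
  return typeof user.assistantId === 'string' && typeof user.threadId === 'string';
}
```

Inside `if (hasAssistant(user)) { ... }`, both `user.assistantId` and `user.threadId` are typed as plain strings.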

Step 2: Creating new UI Components

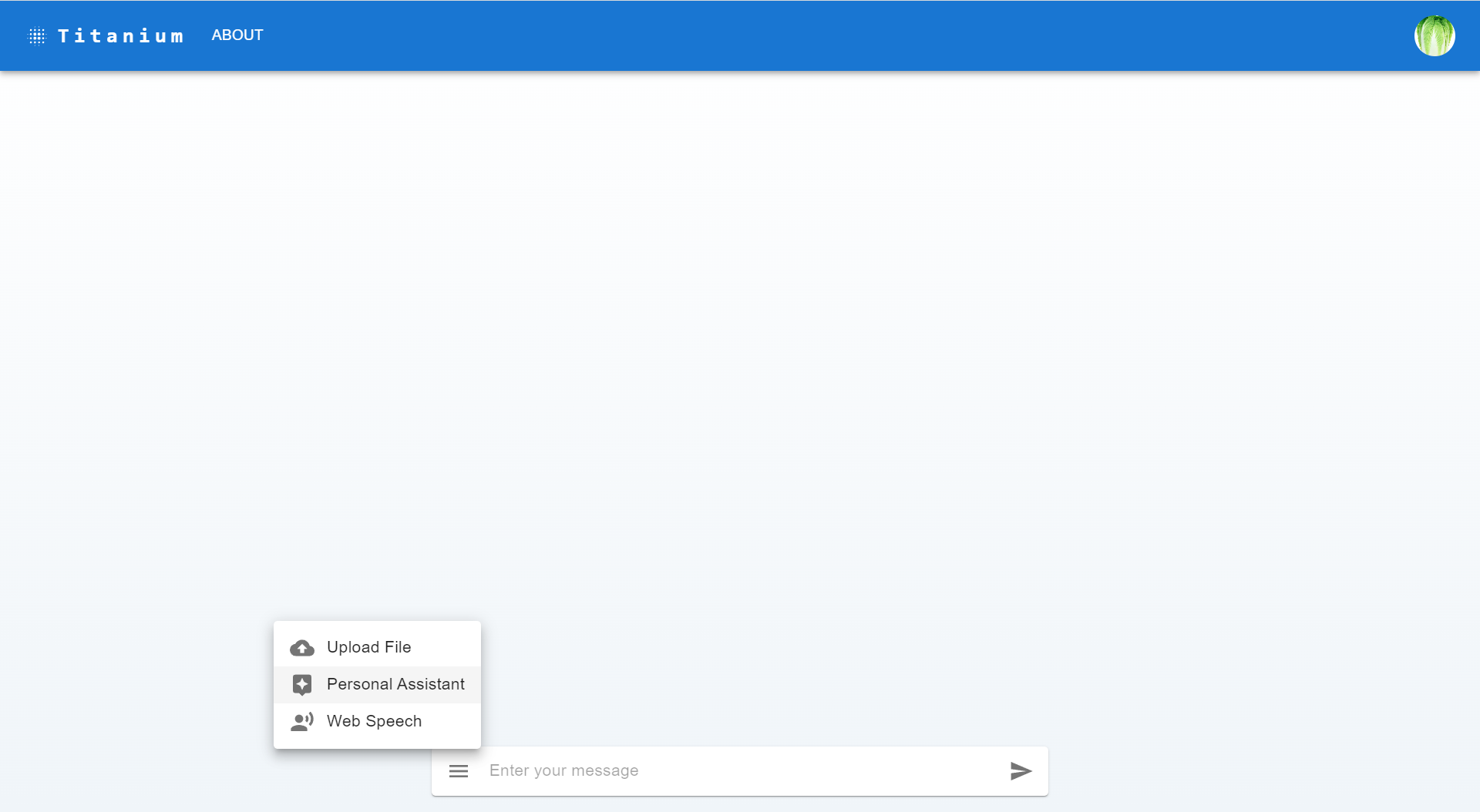

In this step, we'll create the new UI components needed to support the new functionality. This includes a new AssistantDialog component, which displays the Assistant configuration dialog. We'll also update the CustomizedInputBase component to support the new functionality.

Update the CustomizedInputBase component

You may notice from the code below that the CustomizedInputBase component has changed significantly, with new functions and state variables that support the AssistantDialog component. CustomizedInputBase displays the Assistant menu and lets the user interact with the Assistant when it's enabled. When the Assistant is disabled, the user can still use the chat input component, which switches dynamically to the standard streaming chat for a seamless experience.

```tsx
import React, { useState, useRef, useEffect } from 'react';
import Paper from '@mui/material/Paper';
import InputBase from '@mui/material/InputBase';
import IconButton from '@mui/material/IconButton';
import MenuIcon from '@mui/icons-material/Menu';
import SendIcon from '@mui/icons-material/Send';
import Menu from '@mui/material/Menu';
import MenuItem from '@mui/material/MenuItem';
import ListItemIcon from '@mui/material/ListItemIcon';
import FileUploadIcon from '@mui/icons-material/CloudUpload';
import SpeechIcon from '@mui/icons-material/RecordVoiceOver';
import AssistantIcon from '@mui/icons-material/Assistant';
import { useTheme } from '@mui/material/styles';
import useMediaQuery from '@mui/material/useMediaQuery';
import AssistantDialog from '../Assistant/AssistantDialog';
import { retrieveAssistant } from '@/app/services/assistantService';
import { useSession } from 'next-auth/react';

const CustomizedInputBase = ({
  setIsLoading,
  onSendMessage,
  isAssistantEnabled,
  setIsAssistantEnabled,
}: {
  setIsLoading: React.Dispatch<React.SetStateAction<boolean>>;
  onSendMessage: (message: string) => void;
  isAssistantEnabled: boolean;
  setIsAssistantEnabled: (isAssistantEnabled: boolean) => void;
}) => {
  const { data: session } = useSession();
  const theme = useTheme();
  const isSmallScreen = useMediaQuery(theme.breakpoints.down('sm'));
  const [anchorEl, setAnchorEl] = useState<null | HTMLElement>(null);
  const [inputValue, setInputValue] = useState('');
  const fileInputRef = useRef<HTMLInputElement>(null);
  const [isAssistantDialogOpen, setIsAssistantDialogOpen] = useState(false);
  const [name, setName] = useState<string>('');
  const [description, setDescription] = useState<string>('');
  // Files attached to the Assistant; shape matches the retrieve route's fileList
  const files = useRef<{ name: string; id: string; assistantId: string }[]>([]);

  // Load the saved Assistant configuration whenever the session changes
  useEffect(() => {
    const prefetchAssistantData = async () => {
      if (session) {
        try {
          setIsLoading(true);
          const userEmail = session.user?.email as string;
          const response = await retrieveAssistant({ userEmail });
          if (response.assistant) {
            setName(response.assistant.name);
            setDescription(response.assistant.instructions);
            setIsAssistantEnabled(response.isAssistantEnabled);
            files.current = response.fileList;
          }
        } catch (error) {
          console.error('Error prefetching assistant:', error);
        } finally {
          setIsLoading(false);
        }
      }
    };
    prefetchAssistantData();
  }, [session, setIsAssistantEnabled, setIsLoading]);

  // Enter sends the message instead of submitting the form
  const handleKeyDown = (event: React.KeyboardEvent<HTMLFormElement>) => {
    if (event.key === 'Enter') {
      event.preventDefault();
      if (inputValue.trim()) {
        onSendMessage(inputValue);
        setInputValue('');
      }
    }
  };

  const handleInputChange = (event: React.ChangeEvent<HTMLInputElement>) => {
    setInputValue(event.target.value);
  };

  const handleSendClick = () => {
    if (inputValue.trim()) {
      onSendMessage(inputValue);
      setInputValue('');
    }
  };

  const handleMenuOpen = (event: React.MouseEvent<HTMLElement>) => {
    setAnchorEl(event.currentTarget);
  };

  const handleMenuClose = () => {
    setAnchorEl(null);
  };

  // Open the hidden file input's picker
  const handleUploadClick = () => {
    fileInputRef.current?.click();
    handleMenuClose();
  };

  const handleAssistantsClick = async () => {
    setIsAssistantDialogOpen(true);
    handleMenuClose();
  };

  // Upload the selected file, then refresh the Assistant's file list
  const handleFileSelect = async (event: React.ChangeEvent<HTMLInputElement>) => {
    const file = event.target.files?.[0];
    if (file) {
      const formData = new FormData();
      formData.append('file', file);
      formData.append('userEmail', session?.user?.email as string);
      try {
        setIsLoading(true);
        const fileUploadResponse = await fetch('/api/upload', {
          method: 'POST',
          body: formData,
        });
        if (!fileUploadResponse.ok || fileUploadResponse.status !== 200) {
          throw new Error(`HTTP error! Status: ${fileUploadResponse.status}`);
        }
        const retrieveAssistantResponse = await retrieveAssistant({
          userEmail: session?.user?.email as string,
        });
        if (retrieveAssistantResponse.assistant) {
          files.current = retrieveAssistantResponse.fileList;
        }
        console.log('File uploaded successfully', fileUploadResponse);
      } catch (error) {
        console.error('Failed to upload file:', error);
      } finally {
        setIsLoading(false);
      }
    }
  };

  return (
    <>
      <Paper
        component="form"
        sx={{
          p: '2px 4px',
          display: 'flex',
          alignItems: 'center',
          width: isSmallScreen ? '100%' : 600,
        }}
        onKeyDown={handleKeyDown}
      >
        <IconButton sx={{ p: '10px' }} aria-label="menu" onClick={handleMenuOpen}>
          <MenuIcon />
        </IconButton>
        <Menu
          anchorEl={anchorEl}
          open={Boolean(anchorEl)}
          onClose={handleMenuClose}
          anchorOrigin={{ vertical: 'top', horizontal: 'right' }}
          transformOrigin={{ vertical: 'bottom', horizontal: 'right' }}
        >
          <MenuItem onClick={handleUploadClick}>
            <ListItemIcon>
              <FileUploadIcon />
            </ListItemIcon>
            Upload File
          </MenuItem>
          <MenuItem onClick={handleAssistantsClick}>
            <ListItemIcon>
              <AssistantIcon />
            </ListItemIcon>
            Personal Assistant
          </MenuItem>
          <MenuItem onClick={handleMenuClose}>
            <ListItemIcon>
              <SpeechIcon />
            </ListItemIcon>
            Web Speech
          </MenuItem>
        </Menu>
        <InputBase
          sx={{ ml: 1, flex: 1 }}
          placeholder="Enter your message"
          value={inputValue}
          onChange={handleInputChange}
        />
        <IconButton type="button" sx={{ p: '10px' }} aria-label="send" onClick={handleSendClick}>
          <SendIcon />
        </IconButton>
      </Paper>
      {/* Hidden file input, opened from the "Upload File" menu item */}
      <input
        type="file"
        ref={fileInputRef}
        style={{ display: 'none' }}
        onChange={handleFileSelect}
      />
      <AssistantDialog
        open={isAssistantDialogOpen}
        onClose={() => setIsAssistantDialogOpen(false)}
        name={name}
        setName={setName}
        description={description}
        setDescription={setDescription}
        isAssistantEnabled={isAssistantEnabled}
        setIsAssistantEnabled={setIsAssistantEnabled}
        setIsLoading={setIsLoading}
        files={files.current}
      />
    </>
  );
};

export default CustomizedInputBase;
```

Create the AssistantDialog component

This component displays the Assistant configuration dialog. The user saves changes by clicking Accept, clears the form with Reset, and closes the dialog without saving with Cancel. The Delete button removes all Assistant-related data and files after a confirmation prompt. Finally, the switch enables or disables the Assistant.

```tsx
import React, { useState } from 'react';
import Dialog from '@mui/material/Dialog';
import DialogActions from '@mui/material/DialogActions';
import DialogContent from '@mui/material/DialogContent';
import DialogContentText from '@mui/material/DialogContentText';
import DialogTitle from '@mui/material/DialogTitle';
import Button from '@mui/material/Button';
import TextField from '@mui/material/TextField';
import FormControl from '@mui/material/FormControl';
import Switch from '@mui/material/Switch';
import Box from '@mui/material/Box';
import Typography from '@mui/material/Typography';
import Paper from '@mui/material/Paper';
import {
  Avatar,
  List,
  ListItem,
  ListItemAvatar,
  ListItemText,
} from '@mui/material';
import Grid from '@mui/material/Grid';
import IconButton from '@mui/material/IconButton';
import DeleteIcon from '@mui/icons-material/Delete';
import FolderIcon from '@mui/icons-material/Folder';
import {
  updateAssistant,
  deleteAssistantFile,
  deleteAssistant,
} from '@/app/services/assistantService';
import { useSession } from 'next-auth/react';

interface AssistantDialogProps {
  open: boolean;
  onClose: () => void;
  name: string;
  setName: (name: string) => void;
  description: string;
  setDescription: (description: string) => void;
  isAssistantEnabled: boolean;
  setIsAssistantEnabled: (isAssistantEnabled: boolean) => void;
  onToggleAssistant?: (isAssistantEnabled: boolean) => void;
  onReset?: () => void;
  setIsLoading: React.Dispatch<React.SetStateAction<boolean>>;
  files: { name: string; id: string; assistantId: string }[];
}

const AssistantDialog: React.FC<AssistantDialogProps> = ({
  open,
  onClose,
  name,
  setName,
  description,
  setDescription,
  isAssistantEnabled,
  setIsAssistantEnabled,
  onToggleAssistant,
  onReset,
  setIsLoading,
  files,
}) => {
  const { data: session } = useSession();
  const [error, setError] = useState<{ name: boolean; description: boolean }>({
    name: false,
    description: false,
  });
  const [isConfirmDialogOpen, setIsConfirmDialogOpen] = useState(false);

  // Validate the form, then create or update the Assistant
  const handleAccept = async () => {
    let hasError = false;
    if (!name) {
      setError((prev) => ({ ...prev, name: true }));
      hasError = true;
    }
    if (!description) {
      setError((prev) => ({ ...prev, description: true }));
      hasError = true;
    }
    if (hasError) return;
    try {
      onClose();
      setIsLoading(true);
      if (session) {
        const userEmail = session.user?.email as string;
        await updateAssistant({ name, description, isAssistantEnabled, userEmail });
        console.log('Assistant updated successfully');
      } else {
        throw new Error('No session found');
      }
    } catch (error) {
      console.error('Error updating assistant:', error);
    } finally {
      setIsLoading(false);
    }
  };

  // Update the shared flag; it is persisted when the user clicks Accept
  const handleToggle = (event: React.ChangeEvent<HTMLInputElement>) => {
    setIsAssistantEnabled(event.target.checked);
    if (onToggleAssistant) {
      onToggleAssistant(event.target.checked);
    }
  };

  // Clear the form locally; nothing is saved until Accept
  const handleReset = () => {
    setName('');
    setDescription('');
    setIsAssistantEnabled(false);
    setError({ name: false, description: false });
    if (onReset) {
      onReset();
    }
  };

  const handleFileDelete = async (file: any) => {
    try {
      setIsLoading(true);
      console.log('Deleting file from the assistant:', file);
      const response = await deleteAssistantFile({ file });
      console.log('File successfully deleted from the assistant:', response);
      // Mutates the shared files array in place; the list re-renders when
      // setIsLoading(false) below updates the parent component
      files.splice(files.indexOf(file), 1);
    } catch (error) {
      console.error('Failed to remove file from the assistant:', error);
    } finally {
      setIsLoading(false);
    }
  };

  const handleAssistantDelete = () => {
    setIsConfirmDialogOpen(true);
  };

  // Runs after the user confirms in ConfirmationDialog
  const performAssistantDelete = async () => {
    setIsConfirmDialogOpen(false);
    const userEmail = session?.user?.email as string;
    try {
      setIsLoading(true);
      const response = await deleteAssistant({ userEmail });
      console.log('Assistant deleted successfully', response);
      files.splice(0, files.length);
      handleReset();
      onClose();
    } catch (error) {
      console.error('Error deleting assistant:', error);
    } finally {
      setIsLoading(false);
    }
  };

  const ConfirmationDialog = () => (
    <Dialog open={isConfirmDialogOpen} onClose={() => setIsConfirmDialogOpen(false)}>
      <DialogTitle>{'Confirm Delete'}</DialogTitle>
      <DialogContent>
        <DialogContentText>
          Are you sure you want to delete your Assistant? All associated
          Threads, Messages and Files will also be deleted.
        </DialogContentText>
      </DialogContent>
      <DialogActions>
        <Button onClick={() => setIsConfirmDialogOpen(false)} color="primary">
          Cancel
        </Button>
        <Button onClick={performAssistantDelete} color="secondary">
          Delete
        </Button>
      </DialogActions>
    </Dialog>
  );

  return (
    <Dialog open={open} onClose={onClose}>
      <DialogTitle style={{ textAlign: 'center' }}>
        Customize your Personal Assistant
      </DialogTitle>
      <DialogContent style={{ paddingBottom: 8 }}>
        <FormControl fullWidth margin="dense" error={error.name} variant="outlined">
          <TextField
            autoFocus
            label="Name"
            fullWidth
            variant="outlined"
            value={name || ''}
            onChange={(e) => setName(e.target.value)}
            error={error.name}
            helperText={error.name ? 'Name is required' : ' '}
          />
        </FormControl>
        <FormControl fullWidth margin="dense" error={error.description} variant="outlined">
          <TextField
            label="Description"
            fullWidth
            multiline
            rows={4}
            variant="outlined"
            value={description || ''}
            onChange={(e) => setDescription(e.target.value)}
            error={error.description}
            helperText={error.description ? 'Description is required' : ' '}
          />
        </FormControl>
        <Grid item xs={12} md={6}>
          <Paper variant="outlined" sx={{ padding: 2, marginBottom: 2 }}>
            <Typography variant="subtitle1" sx={{ textAlign: 'center' }}>
              Attached Files
            </Typography>
            <Box sx={{ height: '160px', overflowY: 'auto' }}>
              <List dense>
                {files.map((file) => (
                  <ListItem
                    key={file.id}
                    secondaryAction={
                      <IconButton
                        edge="end"
                        aria-label="delete"
                        onClick={() => handleFileDelete(file)}
                      >
                        <DeleteIcon />
                      </IconButton>
                    }
                  >
                    <ListItemAvatar>
                      <Avatar>
                        <FolderIcon />
                      </Avatar>
                    </ListItemAvatar>
                    <ListItemText primary={file.name} />
                  </ListItem>
                ))}
              </List>
            </Box>
          </Paper>
        </Grid>
      </DialogContent>
      <DialogActions style={{ paddingTop: 0 }}>
        <Box display="flex" justifyContent="center" alignItems="center" width="100%">
          <Button onClick={handleAccept}>Accept</Button>
          <Button onClick={handleReset}>Reset</Button>
          <Button onClick={onClose}>Cancel</Button>
          <Button onClick={handleAssistantDelete}>Delete</Button>
          <Typography variant="caption" sx={{ mx: 1 }}>
            Off
          </Typography>
          <Switch checked={isAssistantEnabled} onChange={handleToggle} name="activeAssistant" />
          <Typography variant="caption" sx={{ mx: 1 }}>
            On
          </Typography>
        </Box>
      </DialogActions>
      <ConfirmationDialog />
    </Dialog>
  );
};

export default AssistantDialog;
```
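`handleAccept` validates the two fields with separate `setError` calls. The same check can be a pure function that returns both flags at once, so the component calls `setError` a single time. This is a hypothetical sketch. Unlike the dialog, it also treats whitespace-only input as missing, which is an assumption about the desired behavior.

```typescript
// Hypothetical sketch of handleAccept's validation as a pure function.
interface AssistantForm {
  name: string;
  description: string;
}

interface FormErrors {
  name: boolean;
  description: boolean;
}

function validateAssistantForm({ name, description }: AssistantForm): FormErrors {
  // Whitespace-only values count as missing (stricter than the dialog's !name check)
  return {
    name: name.trim().length === 0,
    description: description.trim().length === 0,
  };
}

const hasErrors = (errors: FormErrors): boolean => errors.name || errors.description;
```

In the dialog this would read `const errors = validateAssistantForm({ name, description }); setError(errors); if (hasErrors(errors)) return;`.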

Step 3: New API Routes

For this step, we'll need to create 4 new API routes:

- /api/assistant/retrieve: retrieves the Assistant configuration and files.

- /api/assistant/update: creates or updates the Assistant configuration.

- /api/assistant/delete-file: deletes a specific file from the Assistant, using the file list in the UI component.

- /api/assistant/delete: deletes the Assistant and its related Threads, Messages and files.

The /api/assistant/retrieve route

This route retrieves the Assistant configuration and its attached files. It is called when a session is created and again after a new file is uploaded to the Assistant, so the user always sees the latest configuration.

```typescript
import { NextRequest, NextResponse } from 'next/server';
import clientPromise from '@/app/lib/client/mongodb';
import OpenAI from 'openai';

// Reads OPENAI_API_KEY from the environment
const openai = new OpenAI();

// IUser is the User model interface shown in Step 1
export async function GET(req: NextRequest) {
  try {
    const client = await clientPromise;
    const db = client.db();
    // The client sends the user's email as a request header
    const userEmail = req.headers.get('userEmail');
    if (!userEmail) {
      return NextResponse.json('userEmail header is required', { status: 400 });
    }
    // Retrieve the user from the database
    const usersCollection = db.collection<IUser>('users');
    const user = await usersCollection.findOne({ email: userEmail });
    if (!user) {
      return NextResponse.json('User not found', { status: 404 });
    }
    // If the user has an Assistant, fetch it, its Thread and its files from OpenAI
    if (user.assistantId) {
      const assistant = await openai.beta.assistants.retrieve(user.assistantId);
      const thread = await openai.beta.threads.retrieve(user.threadId as string);
      const fileList = await openai.beta.assistants.files.list(user.assistantId);
      // Assistant files only carry IDs, so look up each file's name
      const filesWithNames: { id: string; name: string; assistantId: string }[] = [];
      for (const fileObject of fileList.data) {
        const file = await openai.files.retrieve(fileObject.id);
        filesWithNames.push({
          id: fileObject.id,
          name: file.filename,
          assistantId: user.assistantId,
        });
      }
      return NextResponse.json(
        {
          message: 'Assistant retrieved',
          assistant,
          threadId: thread.id,
          fileList: filesWithNames,
          isAssistantEnabled: user.isAssistantEnabled,
        },
        { status: 200 }
      );
    }
    // The user hasn't created an Assistant yet
    return NextResponse.json({ message: 'No assistant found' }, { status: 200 });
  } catch (error: any) {
    return NextResponse.json(error.message, { status: 500 });
  }
}
```
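The route above looks up file names one request at a time, so its latency grows with the number of attached files. A sketch of a concurrent version follows. It is not the article's code. `retrieveFile` is injected, and in the route it could be `(id) => openai.files.retrieve(id)`, whose result includes `id` and `filename`.

```typescript
// Sketch: resolve file names concurrently instead of sequentially.
interface FileMeta {
  id: string;
  filename: string;
}

interface AssistantFileEntry {
  id: string;
  name: string;
  assistantId: string;
}

async function listFilesWithNames(
  fileIds: string[],
  assistantId: string,
  retrieveFile: (id: string) => Promise<FileMeta>
): Promise<AssistantFileEntry[]> {
  // Promise.all keeps the input order even though the requests run concurrently
  const metas = await Promise.all(fileIds.map((id) => retrieveFile(id)));
  return metas.map((meta) => ({ id: meta.id, name: meta.filename, assistantId }));
}
```

A possible call site is `await listFilesWithNames(fileList.data.map((f) => f.id), user.assistantId, (id) => openai.files.retrieve(id))`. Keep in mind that concurrency trades latency for bursts of API calls.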

The /api/assistant/update route

This route will be used to update the Assistant configuration and will trigger when the user clicks on the Accept button in the Assistant configuration dialog.

```typescript
import { NextRequest, NextResponse } from 'next/server';
import clientPromise from '../../../lib/client/mongodb';
import OpenAI from 'openai';

const openai = new OpenAI();

export async function POST(req: NextRequest) {
  try {
    const client = await clientPromise;
    const db = client.db();
    const { userEmail, name, description, isAssistantEnabled } = await req.json();
    if (!userEmail || !name || !description || isAssistantEnabled === undefined) {
      return NextResponse.json('Missing required parameters', { status: 400 });
    }
    const usersCollection = db.collection<IUser>('users');
    const user = await usersCollection.findOne({ email: userEmail });
    if (!user) {
      return NextResponse.json('User not found', { status: 404 });
    }
    let assistant, thread;
    if (!user.assistantId) {
      // First save: create the Assistant and a Thread, then store both IDs
      assistant = await openai.beta.assistants.create({
        instructions: description,
        name: name,
        tools: [{ type: 'retrieval' }, { type: 'code_interpreter' }],
        model: process.env.OPENAI_API_MODEL as string,
      });
      thread = await openai.beta.threads.create();
      await usersCollection.updateOne(
        { email: userEmail },
        { $set: { assistantId: assistant.id, threadId: thread.id, isAssistantEnabled } }
      );
    } else {
      // Later saves: update the existing Assistant in place. file_ids is omitted
      // because in the v1 Assistants API it replaces the attached files, so []
      // would detach every uploaded file.
      assistant = await openai.beta.assistants.update(user.assistantId, {
        instructions: description,
        name: name,
        tools: [{ type: 'retrieval' }, { type: 'code_interpreter' }],
        model: process.env.OPENAI_API_MODEL as string,
      });
      thread = await openai.beta.threads.retrieve(user.threadId as string);
      await usersCollection.updateOne(
        { email: userEmail },
        { $set: { isAssistantEnabled } }
      );
    }
    return NextResponse.json(
      {
        message: 'Assistant updated',
        assistantId: assistant.id,
        threadId: thread.id,
        // Return the value just saved; `user` still holds the previous one
        isAssistantEnabled,
      },
      { status: 200 }
    );
  } catch (error: any) {
    return NextResponse.json(error.message, { status: 500 });
  }
}
```
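The update route only checks its inputs for truthiness, so a string such as `"false"` would pass as `isAssistantEnabled`. A type guard can check the actual types of the JSON body instead. This is a hypothetical sketch, not part of Titanium.

```typescript
// Hypothetical sketch: validate the update route's JSON body with a type guard.
interface UpdateAssistantBody {
  userEmail: string;
  name: string;
  description: string;
  isAssistantEnabled: boolean;
}

const nonEmptyString = (value: unknown): value is string =>
  typeof value === 'string' && value.length > 0;

function isUpdateAssistantBody(body: unknown): body is UpdateAssistantBody {
  if (typeof body !== 'object' || body === null) return false;
  const { userEmail, name, description, isAssistantEnabled } = body as Record<string, unknown>;
  return (
    nonEmptyString(userEmail) &&
    nonEmptyString(name) &&
    nonEmptyString(description) &&
    typeof isAssistantEnabled === 'boolean' // false is valid, "false" is not
  );
}
```

In the route you would write `const body = await req.json(); if (!isUpdateAssistantBody(body)) return NextResponse.json('Missing required parameters', { status: 400 });`, after which `body` is fully typed.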

The /api/assistant/delete-file route

This route deletes a specific file from the Assistant. It is triggered when the user clicks the delete icon next to a file in the dialog's list of attached files. The file is first detached from the Assistant and then deleted from the uploaded files in the OpenAI account.

```typescript
import { NextRequest, NextResponse } from 'next/server';
import OpenAI from 'openai';

const openai = new OpenAI();

export async function POST(req: NextRequest) {
  try {
    // `file` is an entry from the retrieve route's fileList: { id, name, assistantId }
    const { file } = await req.json();
    // Detach the file from the Assistant
    const response = await openai.beta.assistants.files.del(file.assistantId, file.id);
    // Then delete the uploaded file itself
    await openai.files.del(file.id);
    return NextResponse.json({ message: 'File deleted', response }, { status: 200 });
  } catch (error) {
    console.error('Assistant file deletion unsuccessful:', error);
    return NextResponse.json(
      {
        message: 'Assistant file deletion unsuccessful',
        // Error instances serialize to {} in JSON, so send the message instead
        error: error instanceof Error ? error.message : String(error),
      },
      { status: 500 }
    );
  }
}
```
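On the UI side, the dialog removes a deleted file with `files.splice(files.indexOf(file), 1)`. That mutates the array shared with the parent through a ref. It works here because the file always comes from that same array, but if `indexOf` ever returned -1, `splice(-1, 1)` would silently remove the last item instead. Below is a sketch of an immutable alternative. It assumes the list is kept in React state and updated with something like `setFiles((prev) => withoutFile(prev, file.id))`, where `setFiles` is hypothetical.

```typescript
// Sketch: remove a deleted file from the UI list without mutating the input.
interface ListedFile {
  id: string;
  name: string;
  assistantId: string;
}

function withoutFile(files: readonly ListedFile[], fileId: string): ListedFile[] {
  // filter returns a new array (a no-op copy if the ID isn't present), so React
  // sees a new reference and re-renders
  return files.filter((f) => f.id !== fileId);
}
```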

The /api/assistant/delete route

This route deletes the Assistant along with its related Threads, Messages and files. It is triggered when the user clicks the Delete button in the Assistant configuration dialog and confirms the deletion.

```typescript
import { NextRequest, NextResponse } from 'next/server';
import clientPromise from '@/app/lib/client/mongodb';
import OpenAI from 'openai';

const openai = new OpenAI();

export async function POST(req: NextRequest) {
  try {
    const { userEmail } = await req.json();
    const client = await clientPromise;
    const db = client.db();
    const usersCollection = db.collection<IUser>('users');
    const user = await usersCollection.findOne({ email: userEmail });
    if (!user) {
      return NextResponse.json('User not found', { status: 404 });
    }
    if (user.assistantId) {
      const assistant = await openai.beta.assistants.retrieve(user.assistantId);
      const assistantFiles = await openai.beta.assistants.files.list(assistant.id);
      // Await each deletion; forEach(async ...) would not wait for them to finish
      for (const file of assistantFiles.data) {
        await openai.beta.assistants.files.del(assistant.id, file.id);
        await openai.files.del(file.id);
      }
      // Delete the user's Thread as well, as the confirmation dialog promises
      if (user.threadId) {
        await openai.beta.threads.del(user.threadId);
      }
      const assistantDeletionResponse = await openai.beta.assistants.del(assistant.id);
      // Clear the stored IDs and disable the Assistant for this user
      await usersCollection.updateOne(
        { email: userEmail },
        {
          $set: {
            assistantId: null,
            threadId: null,
            isAssistantEnabled: false,
          },
        }
      );
      return NextResponse.json(
        {
          message: 'Assistant deleted (with all associated files)',
          response: assistantDeletionResponse,
        },
        { status: 200 }
      );
    }
    // Nothing to delete; respond explicitly instead of returning undefined
    return NextResponse.json({ message: 'No assistant found' }, { status: 200 });
  } catch (error) {
    console.error('Assistant deletion unsuccessful:', error);
    return NextResponse.json(
      {
        message: 'Assistant deletion unsuccessful',
        error: error instanceof Error ? error.message : String(error),
      },
      { status: 500 }
    );
  }
}
```
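Cleanup loops like the one in this route need to await each deletion before responding. `Array.prototype.forEach` ignores the promises returned by an `async` callback, so a `forEach(async ...)` loop returns immediately. Its deletions then finish, or fail, after the response has been sent. The generic sketch below contrasts the two patterns using an injected `deleteOne` function.

```typescript
// Sketch: sequential, awaited deletion versus the forEach(async ...) pitfall.
async function deleteAllSequentially(
  ids: string[],
  deleteOne: (id: string) => Promise<void>
): Promise<string[]> {
  const deleted: string[] = [];
  for (const id of ids) {
    await deleteOne(id); // a failure stops the loop and propagates to the caller
    deleted.push(id);
  }
  return deleted;
}

// Anti-pattern kept for comparison: nothing waits for these promises
function deleteAllWithForEach(ids: string[], deleteOne: (id: string) => Promise<void>): void {
  ids.forEach(async (id) => {
    await deleteOne(id);
  });
}
```

If the deletions are independent and order doesn't matter, `await Promise.all(ids.map(deleteOne))` is a faster alternative that still waits for all of them.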

Step 4: Honorable mentions

Along the way, I had to make some changes to the existing code to improve readability and maintainability.

Creating a Services file

Creating a services file is a good practice, as it allows you to separate the API calls from the UI components. This makes it easier to maintain and test your code. I've created a new file called assistantService.ts in the app/services folder, which contains all the API calls related to the Assistant. In the future, I'll be adding more API calls to this file, as I continue to build out the AI Assistant functionality.

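```typescript
import axios from 'axios';

interface AssistantUpdateData {
  name: string;
  description: string;
  isAssistantEnabled: boolean;
  userEmail: string;
}

interface AssistantRetrieveData {
  userEmail: string;
}

// One entry of the fileList returned by /api/assistant/retrieve
interface AssistantFile {
  id: string;
  name: string;
  assistantId: string;
}

// Create the Assistant on first save, update it afterwards
const updateAssistant = async ({
  name,
  description,
  isAssistantEnabled,
  userEmail,
}: AssistantUpdateData): Promise<any> => {
  try {
    const response = await axios.post('/api/assistant/update', {
      name,
      description,
      isAssistantEnabled,
      userEmail,
    });
    return response.data;
  } catch (error) {
    console.error('Unexpected error:', error);
    throw error;
  }
};

// Fetch the Assistant, its Thread ID and its attached files for a user
const retrieveAssistant = async ({ userEmail }: AssistantRetrieveData): Promise<any> => {
  try {
    // The route reads the user's email from a request header
    const response = await axios.get('/api/assistant/retrieve', {
      headers: { userEmail },
    });
    return response.data;
  } catch (error) {
    console.error('Unexpected error:', error);
    throw error;
  }
};

// Detach a single file from the Assistant and delete it
const deleteAssistantFile = async ({ file }: { file: AssistantFile }): Promise<any> => {
  try {
    const response = await axios.post('/api/assistant/delete-file', { file });
    return response.data;
  } catch (error) {
    console.error('Unexpected error:', error);
    throw error;
  }
};

// Delete the user's Assistant and related data (used by AssistantDialog)
const deleteAssistant = async ({ userEmail }: AssistantRetrieveData): Promise<any> => {
  try {
    const response = await axios.post('/api/assistant/delete', { userEmail });
    return response.data;
  } catch (error) {
    console.error('Unexpected error:', error);
    throw error;
  }
};

export { updateAssistant, retrieveAssistant, deleteAssistantFile, deleteAssistant };
```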
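The testability argument becomes concrete if the service receives its HTTP function instead of importing axios directly. The sketch below is hypothetical and not how Titanium is written. `createAssistantService` and `PostFn` are illustrative names, and only the endpoint paths come from the routes above. With axios you could pass `(url, body) => axios.post(url, body)`, since its responses expose a `data` field.

```typescript
// Hypothetical sketch: inject the HTTP call so the service can be unit-tested
// without axios or a running Next.js server.
type PostFn = (url: string, body: unknown) => Promise<{ data: unknown }>;

interface UpdateBody {
  userEmail: string;
  name: string;
  description: string;
  isAssistantEnabled: boolean;
}

function createAssistantService(post: PostFn) {
  return {
    updateAssistant: async (body: UpdateBody) =>
      (await post('/api/assistant/update', body)).data,
    deleteAssistant: async (userEmail: string) =>
      (await post('/api/assistant/delete', { userEmail })).data,
  };
}
```

A test can then pass a fake `post` that records its calls and returns canned data.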

Improving the prefetch functionality in Chat.tsx

I've also improved the prefetch logic in the Chat.tsx component. It loads the saved Assistant configuration and file list whenever the session changes, so the user always sees the latest configuration. After a file upload, the file list is refreshed separately by handleFileSelect in CustomizedInputBase.

```tsx
useEffect(() => {
  const prefetchAssistantData = async () => {
    if (session) {
      try {
        setIsLoading(true);
        const userEmail = session.user?.email as string;
        const response = await retrieveAssistant({ userEmail });
        if (response.assistant) {
          setName(response.assistant.name);
          setDescription(response.assistant.instructions);
          setIsAssistantEnabled(response.isAssistantEnabled);
          files.current = response.fileList;
        }
      } catch (error) {
        console.error('Error prefetching assistant:', error);
      } finally {
        setIsLoading(false);
      }
    }
  };
  prefetchAssistantData();
}, [session, setIsAssistantEnabled, setIsLoading]);
```
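Because the effect depends on the `session` object, it may run again whenever next-auth hands back a new but equivalent session, for example after refetching on window focus. Each re-run calls the retrieve route again. One hypothetical way to avoid duplicate requests is a small per-email cache that the effect reads from, invalidated after an upload or an Accept. `createPrefetchCache` is an illustrative name, not part of Titanium or next-auth.

```typescript
// Hypothetical sketch: share one in-flight or finished prefetch per user email.
function createPrefetchCache<T>(fetcher: (userEmail: string) => Promise<T>) {
  const cache = new Map<string, Promise<T>>();
  return {
    get(userEmail: string): Promise<T> {
      const cached = cache.get(userEmail);
      if (cached) return cached;
      const pending = fetcher(userEmail);
      cache.set(userEmail, pending);
      // Forget failed requests so a later call can retry
      pending.catch(() => {
        if (cache.get(userEmail) === pending) cache.delete(userEmail);
      });
      return pending;
    },
    // Call after an upload or update so the next get() refetches
    invalidate(userEmail: string): void {
      cache.delete(userEmail);
    },
  };
}
```

With a module-level `const assistantCache = createPrefetchCache((userEmail) => retrieveAssistant({ userEmail }))`, the effect could await `assistantCache.get(email)` and depend on `session?.user?.email` instead of `session`.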

Conclusion and Next Steps

In this article, I've covered how to integrate multi-user Assistants with the OpenAI API. This included building the UI components for the user's Assistant, adding a way to delete all Assistant-related data and files, and handling file uploads to the Assistant. I also covered how to extend and refactor the existing authentication implementation to support the new functionality. I'm conscious that the code isn't perfect and there's plenty of room for improvement, but I hope it will be a good starting point for anyone who wants to build their own AI Assistant.

When building this functionality, it's good to be aware of one key limitation of the OpenAI API: Assistants currently don't support streaming. The user has to wait for the Assistant's complete response before seeing any of it, and when the Assistant works with an uploaded file, a response can take a while depending on the file's complexity. Fret not, however, as the OpenAI team has acknowledged this and is working on introducing streaming for Assistants in the near future.

Feel free to check out Titanium, which already has a lot of the basic functionality for building an AI Assistant in place. You can use it as a starting point for your own project, or just follow along and copy/paste the code snippets you need.

In the next article, I'm thinking of taking a step back, reflecting and doing a round of refactoring to improve the code, ensure coherence, consistency and make it more maintainable. After that, I'll probably work on integrating Vision and speech capabilities.

If you have any questions or comments, feel free to reach out to me on GitHub, LinkedIn, or via email.

See ya around and happy coding!