
Closing the AI Value Gap: Insights from Research

Author: Athos Georgiou

The Value Gap

Something strange is happening in enterprise AI. According to McKinsey's 2025 State of AI survey, 88% of enterprises now report regular AI use, up from 78% a year ago. Generative AI adoption alone more than doubled between 2023 and 2024, jumping from 33% to 71%. Menlo Ventures' 2025 benchmark study found that enterprise GenAI spending hit $11.5 billion the year before, the fastest growth rate in software history.

Yet the returns aren't there:

Approximately 95% of generative AI pilots fail to achieve rapid revenue acceleration, with the vast majority stalling and delivering little to no measurable impact on profit and loss. That's not my opinion. That's from MIT research published in August 2025, based on 52 organizational interviews, 153 senior leader survey responses, and analysis of 300 public AI deployments.

And it's not an isolated finding:

- BCG's October 2024 study reported that 74% of companies struggle to achieve and scale value from their AI investments

- According to estimates cited by the RAND Corporation, the AI project failure rate exceeds 80%, twice the failure rate of non-AI technology projects

Organizations are buying the tools, running the pilots, and hiring the talent, but the transformation isn't materializing.

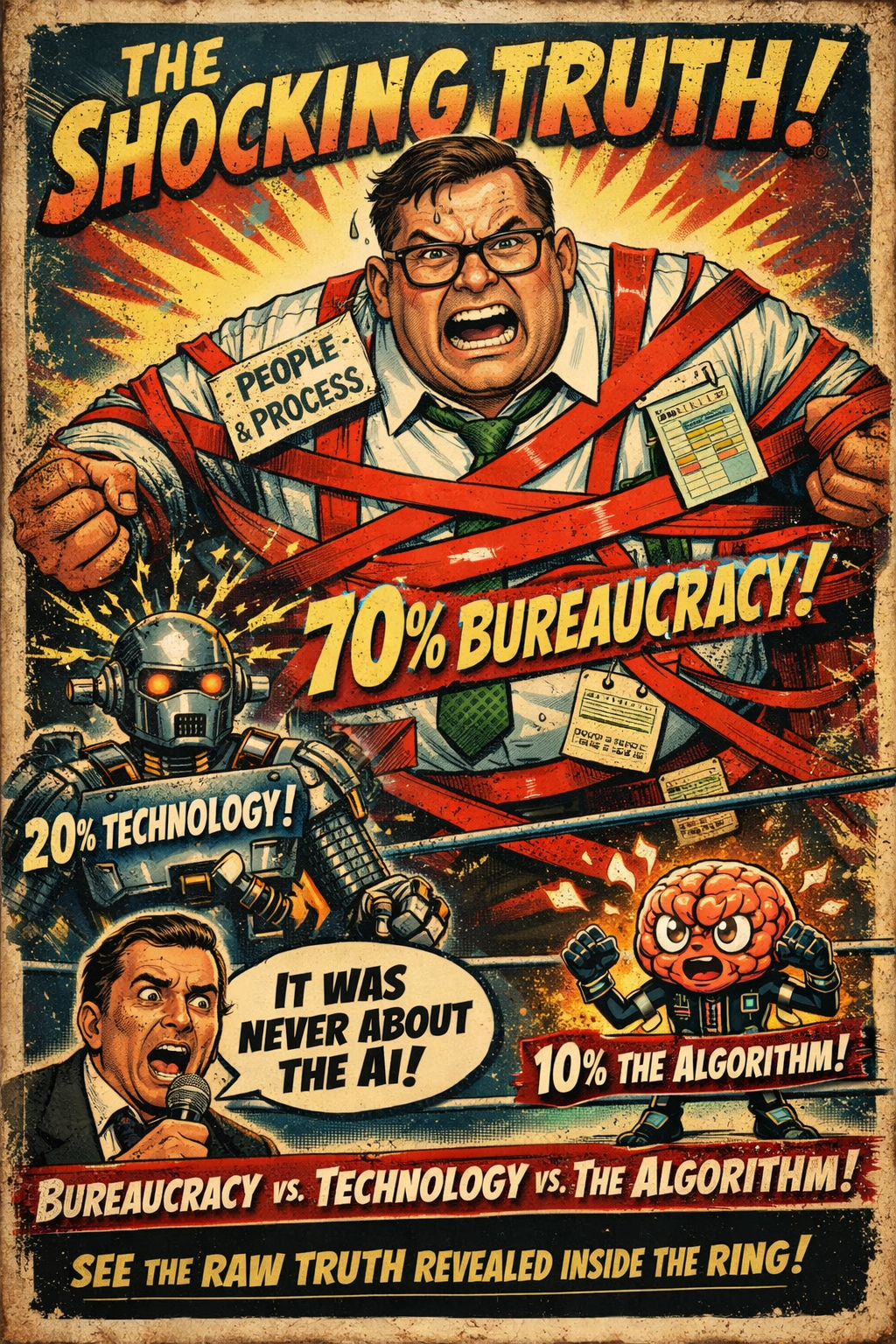

The 70-20-10 Breakdown

What struck me most:

When implementing AI initiatives, approximately 70% of challenges stem from people and process issues, 20% from technology problems, and only 10% from the AI algorithms themselves.

That's from BCG's 2024 AI research. The limiting factor isn't the technology. It's the organization.

This explains why organizations keep failing despite having access to the same sophisticated AI models. They're treating AI as a technology problem when it's fundamentally an organizational one.

Four Barriers to Closing the Gap

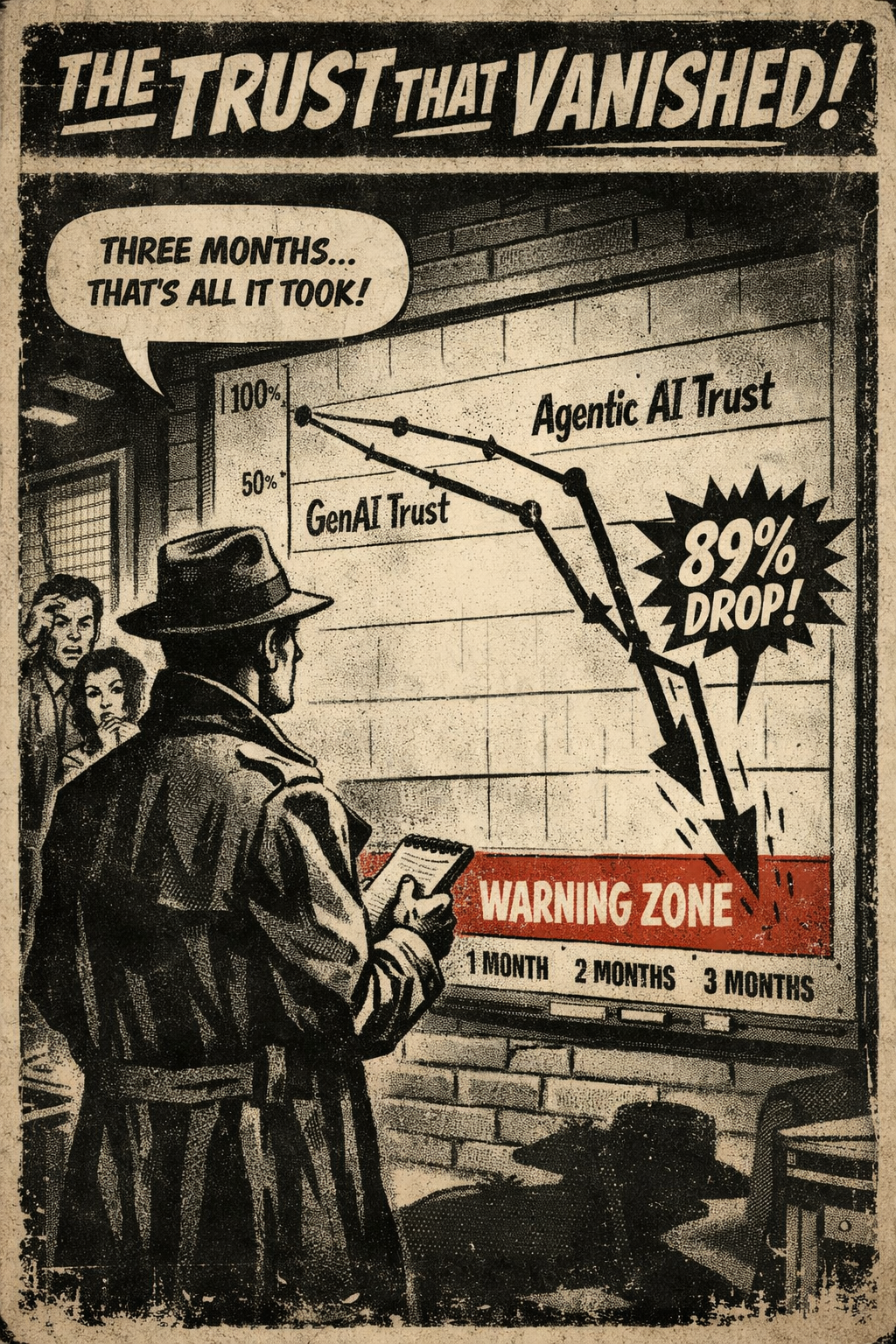

1. The Trust Deficit

Workplace trust numbers are alarming.

According to Deloitte's TrustID research, trust in company-provided generative AI among frontline workers fell 31% between May and July of 2025 alone. Even more striking, trust in agentic AI systems (those capable of autonomous action) dropped 89% during the same three-month period.

This isn't an abstract concern. Research shows that workplace AI correlates with increased job insecurity, which leads to emotional exhaustion and reluctance to acquire new skills.

A 2025 Writer survey found that a majority of C-suite leaders say AI has caused internal divisions within their organizations.

The technology meant to enhance operations is creating internal friction.

Research points to a few drivers:

- The "black box" problem: McKinsey's 2024 survey found that explainability is the second-most commonly reported AI risk, yet only 17% of respondents say they are actively working to mitigate it

- Fear of displacement: The Human Resource Management Review conceptualizes AI resistance as a "three-dimensional concept embodied in employees' fears, inefficacies, and antipathies toward AI"

- Loss of autonomy: Employees are "growing uneasy with technology taking over decisions that were once theirs to make"

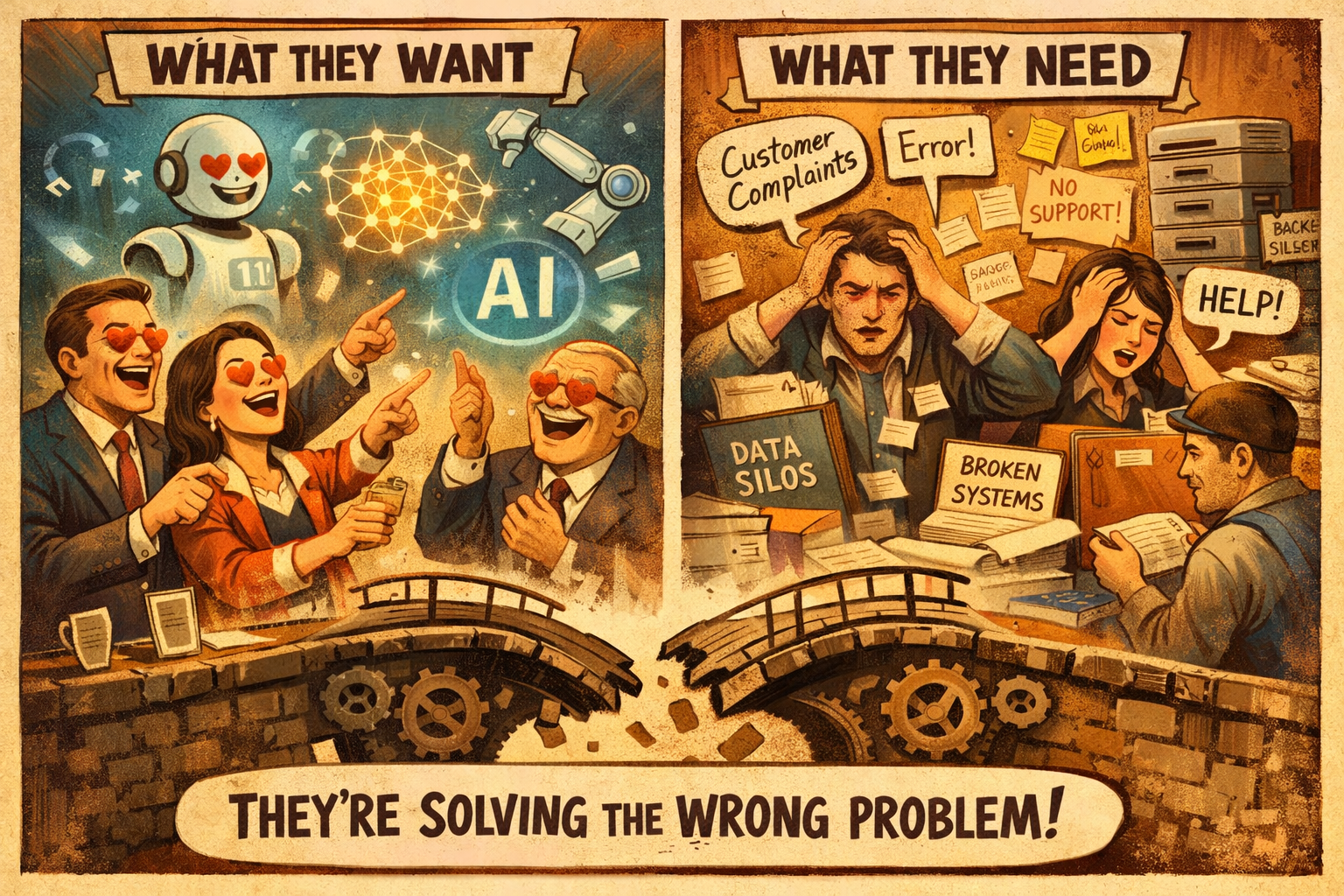

2. Strategic Misalignment

IBM's 2025 CEO study found that only 25% of AI initiatives have delivered expected ROI, and only 16% have scaled enterprise-wide. CEOs cite organizational silos, risk aversion, and expertise gaps as top barriers.

Organizations are investing without a clear understanding of what success looks like.

The RAND Corporation's interviews with 65 experienced data scientists identified several root causes, including "focus on latest technology rather than solving real problems." Organizations chase AI because competitors are doing it, or because the technology is exciting, rather than because they've identified specific problems AI can solve.

This misalignment shows up in how AI gets used. The OECD's 2025 SME study found that among SMEs using generative AI, only 29% report using it for core business activities. The majority use it for peripheral tasks, a pattern that limits impact and makes ROI difficult to demonstrate.

3. Implementation Failures

RAND's research identified "misunderstanding (or miscommunicating) what problem needs to be solved" as the leading root cause of AI project failure. Organizations build and deploy AI models optimized for the wrong metrics because they never clearly defined what success means.

Data problems are endemic. 64% of organizations report data quality as their top data integrity challenge, according to Precisely and Drexel University's 2025 Data Integrity Trends report. Without clean, relevant, sufficient data, even the best algorithms produce unreliable results.

Perhaps most telling is MIT's finding on build-versus-buy decisions: vendor partnerships succeed about 67% of the time, while internal builds succeed only one-third as often.

Organizations consistently overestimate their internal capabilities while underestimating the complexity of AI implementation.

4. Governance Gaps

Organizations are deploying increasingly autonomous AI systems in an environment where no regulatory frameworks specific to agentic AI exist. Current rules address general AI safety, bias, privacy, and explainability, but gaps remain for systems that can act independently.

Nearly 60% of AI leaders surveyed by Deloitte cite integration with legacy systems and addressing risk and compliance concerns as their primary challenges in adopting agentic AI.

And measurement infrastructure is lacking. KPMG's Q3 2025 AI Pulse Survey found that 78% of leaders acknowledge traditional business metrics don't capture AI's full impact, meaning organizations often can't tell whether their investments are working.

Four Patterns from Successful Implementations

But the research also shows what works. Organizations that succeed with AI share common characteristics.

Strategy 1: Build Trust Through Transparency

Gartner predicts that by 2026, organizations that operationalize AI transparency, trust, and security will see a 50% improvement in adoption, business goals, and user acceptance.

Research published in Scientific Reports found that explainable AI improves technological acceptance through "uncertainty reduction." When people understand how a system works, they're more willing to use it and trust its outputs.

The Academy of Management Annals review of empirical research identifies specific trust-building factors:

- Tangibility: Can people see what the AI does?

- Transparency: Can they understand why?

- Reliability: Does it perform consistently?

- Immediacy: Does it respond in real time?

Critically, research in the Asia Pacific Journal of Human Resources found that trustworthy leadership increases employees' AI trust and intention to adopt. Leaders who visibly use and endorse AI tools, while honestly acknowledging limitations, create conditions for broader organizational trust.

In practice: require AI systems to explain their outputs, put humans in the loop for high-stakes decisions, and have leadership model transparent usage.
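To make the first two practices concrete, here is a minimal Python sketch of a routing rule that won't act on an output without an explanation and sends high-stakes decisions to a person. Everything in it is a hypothetical illustration: the `AIDecision` fields, the `route_decision` function, and the threshold value are assumptions for the example, not something prescribed by the research above.

```python
from dataclasses import dataclass

# Hypothetical record of a single AI output; field names are illustrative.
@dataclass
class AIDecision:
    action: str
    explanation: str   # the system's stated rationale for the output
    stakes: float      # assumed monetary or risk impact of acting on it

# Assumed cut-off above which a human must approve; each organization sets its own.
HIGH_STAKES_THRESHOLD = 10_000.0

def route_decision(decision: AIDecision) -> str:
    """Return where a decision should go: 'reject', 'human_review', or 'auto'."""
    # Transparency rule: outputs without an explanation are never acted on.
    if not decision.explanation.strip():
        return "reject"
    # Human-in-the-loop rule: high-stakes decisions always get a reviewer.
    if decision.stakes >= HIGH_STAKES_THRESHOLD:
        return "human_review"
    return "auto"
```

Encoding the rule explicitly keeps the human checkpoint visible and auditable, which is the point of the transparency argument; where the threshold sits is an organizational decision, not a technical one.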

Strategy 2: Invest in Change Management

Kyndryl's 2025 People Readiness Report found that while 95% of businesses invest in AI, only 14% have aligned their workforce, technology, and business goals. These "AI Pacesetters" are three times more likely than others to have a fully implemented change management strategy.

Academic research frames organizational readiness as requiring alignment across four dimensions:

- Psychological alignment (employee mindset toward AI)

- Cultural resonance (how AI fits organizational values)

- Structural competency (processes and governance)

- Technological fitness (infrastructure readiness)

Organizations that assess and address all four dimensions before deployment dramatically improve their odds.

The Human Resource Management Review research suggests that overcoming AI resistance requires facilitating "experiencing mistrust, existential questioning, and technological reflection" rather than suppressing these responses. Employees need space to work through their concerns, not mandates to adopt.

High-performing organizations don't just automate existing processes. They redesign workflows around AI capabilities. IBM's Race for ROI report found that 32% of organizations using AI are already redesigning value streams around AI rather than layering AI onto existing steps.

In practice: assess readiness across all four dimensions before deployment, allocate dedicated budget for change management, and redesign workflows rather than just automating existing ones.
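As a rough illustration of assessing all four dimensions, the sketch below scores each one and holds deployment until every dimension clears a minimum bar. The 1-to-5 scale, the cut-off of 3, and the helper names are hypothetical; the research names the dimensions but does not prescribe a scoring method.

```python
# The four readiness dimensions described above.
DIMENSIONS = (
    "psychological_alignment",
    "cultural_resonance",
    "structural_competency",
    "technological_fitness",
)

# Assumed minimum score on an assumed 1-to-5 survey scale.
MIN_SCORE = 3

def readiness_gaps(scores: dict[str, int]) -> list[str]:
    """Return the dimensions that are unassessed or score below MIN_SCORE."""
    # A dimension missing from the assessment counts as a gap (score 0).
    return [d for d in DIMENSIONS if scores.get(d, 0) < MIN_SCORE]

def ready_to_deploy(scores: dict[str, int]) -> bool:
    """Deployment waits until every dimension clears the bar."""
    return not readiness_gaps(scores)
```

Checking each dimension separately, instead of averaging, means a strong technology score can't mask a workforce that isn't ready.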

Strategy 3: Demonstrate Value Systematically

Gartner's 2024 AI survey found that 49% of respondents cite difficulty demonstrating AI value as the primary obstacle to adoption. Traditional ROI calculations miss significant value: MIT found the biggest returns in back-office automation that eliminates outsourcing costs, but "these successes often appear as cost avoidance rather than revenue generation, making them invisible to traditional ROI calculations."

The answer seems to be multi-dimensional measurement.

A comprehensive framework includes:

- Efficiency metrics: Time saved, processes automated, tasks completed per employee

- Quality metrics: Error reduction, customer satisfaction improvements, decision accuracy

- Risk metrics: Compliance improvements, incident reduction

- Strategic metrics: New capability enablement, competitive positioning

Time horizons matter too. Measuring a strategic AI initiative by 90-day productivity gains misses the point.

In practice: establish baselines before deployment, measure across all four dimensions, and use different time horizons for different initiative types.
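Here is a minimal sketch of what baseline-relative, multi-dimensional tracking might look like, assuming each metric is measured once before deployment and again afterward. The `Metric` class, the helper functions, and the review horizons (90 days for productivity initiatives, a year for strategic ones) are illustrative assumptions rather than a standard framework.

```python
from dataclasses import dataclass

# The four measurement dimensions from the framework above.
DIMENSIONS = {"efficiency", "quality", "risk", "strategic"}

# Assumed review horizons by initiative type; the numbers are placeholders.
REVIEW_HORIZON_DAYS = {"productivity": 90, "strategic": 365}


@dataclass
class Metric:
    name: str
    dimension: str           # one of DIMENSIONS
    baseline: float          # measured before deployment
    current: float           # measured after deployment
    higher_is_better: bool = True

    def __post_init__(self) -> None:
        if self.dimension not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {self.dimension}")
        if self.baseline == 0:
            raise ValueError(f"{self.name}: baseline must be non-zero")

    def improvement_pct(self) -> float:
        """Percent change from baseline, signed so that positive always means better."""
        change = (self.current - self.baseline) / abs(self.baseline) * 100
        return change if self.higher_is_better else -change


def scorecard(metrics: list[Metric]) -> dict[str, list[tuple[str, float]]]:
    """Group each metric's improvement (rounded to 0.1%) under its dimension."""
    report: dict[str, list[tuple[str, float]]] = {}
    for m in metrics:
        report.setdefault(m.dimension, []).append((m.name, round(m.improvement_pct(), 1)))
    return report


def due_for_evaluation(initiative_type: str, days_live: int) -> bool:
    """True once an initiative has run long enough for its type's review horizon."""
    return days_live >= REVIEW_HORIZON_DAYS[initiative_type]
```

Because every metric is expressed as improvement over its own baseline, a cost-avoidance win such as fewer hours per invoice shows up next to customer-facing metrics instead of disappearing from the report.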

Strategy 4: Phase Implementation Strategically

The build-versus-partner numbers are stark: vendor partnerships succeed about 67% of the time, while internal builds succeed only one-third as often.

McKinsey describes the current state of enterprise AI as "extensive experimentation." High performers tend to follow a progression:

- Targeted pilots with clearly defined success criteria and limited scope

- Controlled scaling with continuous feedback and iteration

- Enterprise integration with workflow redesign

- Continuous optimization and expansion to new use cases

Starting with back-office processes makes sense. MIT found the biggest ROI in areas like eliminating business process outsourcing and cutting external agency costs. These uses are less glamorous than customer-facing AI but more likely to demonstrate clear value.

In practice: favor vendor partnerships for initial implementations, start with bounded pilots in back-office processes, and scale only after demonstrated success.
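The progression described above can be sketched as a simple stage gate: an initiative advances one phase only when every success criterion defined for its current phase has been met, and an initiative with no defined criteria doesn't advance at all. The phase names follow that progression; the `next_phase` function and the criteria format are hypothetical.

```python
from enum import IntEnum

class Phase(IntEnum):
    TARGETED_PILOT = 1
    CONTROLLED_SCALING = 2
    ENTERPRISE_INTEGRATION = 3
    CONTINUOUS_OPTIMIZATION = 4

def next_phase(current: Phase, criteria_met: dict[str, bool]) -> Phase:
    """Advance one phase only when every success criterion for the current phase is met."""
    if not criteria_met:
        # No defined success criteria means no basis for scaling.
        return current
    if all(criteria_met.values()) and current < Phase.CONTINUOUS_OPTIMIZATION:
        return Phase(current + 1)
    return current
```

Refusing to advance without explicit criteria mirrors the "clearly defined success criteria" requirement for pilots; the final phase is open-ended, so it never advances further.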

The Short Version

- Adoption isn't translating to impact: 88% of enterprises use AI regularly, yet roughly 95% of generative AI pilots deliver no measurable P&L impact, and about 70% of implementation challenges stem from people and process issues, not technology

- Trust is the foundation: Addressing the 31% drop in workplace AI trust requires transparency and leadership modeling

- Partnerships accelerate success: Vendor partnerships succeed ~67% of the time; internal builds succeed one-third as often

- Change management is underinvested: Only 14% of organizations have successfully aligned workforce, technology, and business goals for AI

- Measurement must be multi-dimensional: Traditional ROI misses the biggest wins (cost avoidance, back-office efficiency)

So What Now?

I started digging into this research because I kept running into the same frustrations: projects that stalled for reasons that had nothing to do with the models, teams that pushed back on tools nobody explained to them, success metrics that felt disconnected from actual outcomes. Reading through these studies helped me put words to patterns I'd only half-understood. I don't have all the answers, but I'm more convinced now that the path forward has less to do with AI and more to do with how we bring people along.

References

- McKinsey & Company. (2025). The State of AI in 2025.

- MIT / Fortune. (2025). Why 95% of AI Pilots Fail.

- Boston Consulting Group. (2024). AI Adoption: Companies Struggle to Scale Value.

- RAND Corporation. (2024). Root Causes of Failure for AI Projects.

- Kyndryl. (2025). People Readiness Report.

- IBM. (2025). 2025 CEO Study.

- IBM. (2025). Race for ROI - EMEA Report.

- Gartner. (2024). GenAI is Most Deployed AI Solution.

- Gartner. (2023). AI TRiSM Framework.

- OECD. (2025). AI Adoption Among SMEs.

- Precisely & Drexel University. (2025). Data Integrity Trends.

- KPMG. (2025). AI Quarterly Pulse Survey Q3.

- Deloitte TrustID / HBR. (2025). Workers Don't Trust AI.

- Menlo Ventures. (2025). The State of Generative AI in the Enterprise.

If you're working on similar problems or have thoughts on this, reach out on GitHub, LinkedIn, or via email. I'd love to hear from you!